This section examines camera perspective, which deals with the visual appearance of spatial objects/scenes captured by photographic and digital cameras. Since camera formats and capabilities cannot be easily separated from the screens used to display camera images, we shall also review screen technologies.

Camera perspective is a category of visual/optical/technical perspective, and also a sub-category of instrument perspective. Apart from human vision or visual perspective (2nd type or retinal perspective), camera perspective is how the vast majority of perspective images are produced.

Camera Technology

A camera is an instrument used to capture and record images and videos, either digitally using an electronic sensor, or chemically using photographic film. Cameras are sometimes placed on the back of other optical instruments, including telescopes and microscopes, and/or used in combination with other technologies such as the internet and CAD, GIS, CGI, CV systems, smart-phones, tablets, etc.

The camera dates back to the 19th century and has evolved significantly along with technological advancements, leading to a range of types and models today. Cameras function through combined optical, mechanical, electrical, and electronic components and principles. These include exposure control, which regulates the amount of light reaching the sensor or film; the lens, which focuses the light; the viewfinder, which allows the user to preview the scene; and the film or sensor, which captures the image.

Camera Types

Today, there are several types of cameras, each being used for specific purposes and offering unique capabilities for several applications.

For example, single-lens reflex (SLR, DSLR) cameras provide a real-time, exact preview of the image because the viewfinder looks through the primary lens. Large-format and medium-format cameras offer higher image resolution and are used in professional photography. Compact cameras, known for their portability and simplicity of operation, are popular in consumer photography.

Motion picture cameras are specialised for filming cinematic content, while digital cameras use electronic sensors to capture and store images. Today almost everyone carries a digital still/motion camera built into their smartphone.

Image Formation

We defined optical perspective <IMAGING CLASS> as capturing/creating an image/view of a spatial reality. Regardless of whether we are talking of natural/visual or artificial perspective, a standard component in this process is the use, or simulation, of an imaging device of one kind or another.

For a real-world perspective image (or representation of physical reality) taken from a specific point-of-view, we are dealing with the formation of perspective projections. The imager can be one of several different kinds of device: for example, an eye, a pin-hole camera, a lens-based camera, a graphical drawing technique employed by an artist, or a computer ray-tracing system.

As explained in the Classic Forms section, the fundamental point is that we have a perspective projection procedure in which the rays, or lines of projection, are projected from a range of object/scene field points onto an image surface (normally a 2-D plane). Perspective projection is a geometrical procedure, no matter if the image is formed using graphical or optical methods, because to form a sharp image the real or simulated light rays must be ‘sorted’ out from their natural confusion, in which light rays are reflected in every possible direction.

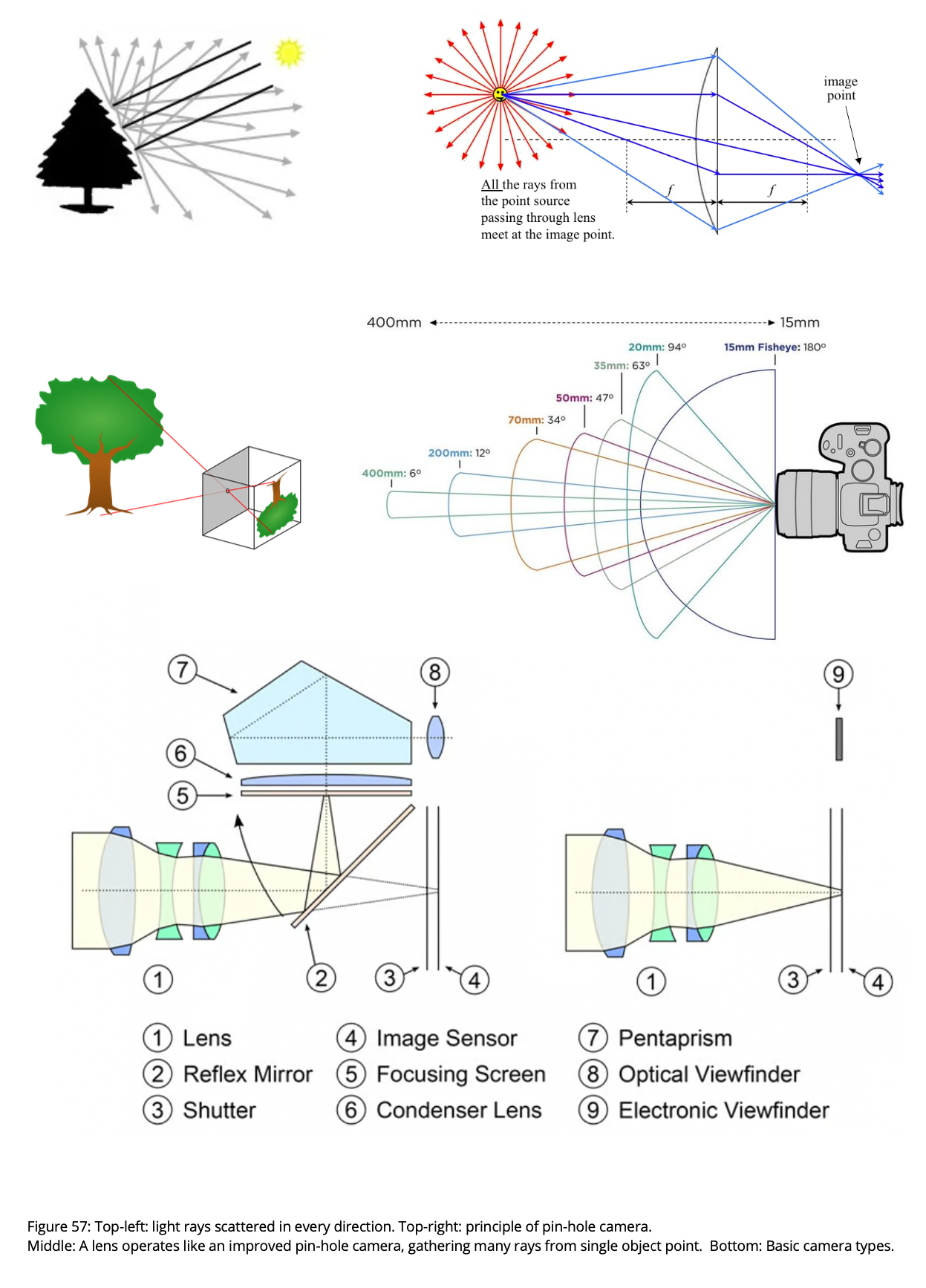

The theory of geometrical imaging explains that each visible object point present in the scene must have all (captured) rays that emanate from that point focussed onto a single image point in the picture plane, and the result is a well-defined image of the object/scene. For this to happen, without a lens, the rays must all pass through the same spatial point in front of a projection screen. This is the principle of a pin-hole camera. The pin-hole operates as a ray-sorter, and for a given object at a given distance, the size of the resulting image depends only on the distance of the pin-hole from the image plane.
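The pin-hole geometry described above can be sketched with similar triangles. This is a minimal illustration under an idealised model (an infinitely small pin-hole at the origin, no diffraction); the function names and the coordinate convention are assumptions made for this example.

```python
def pinhole_project(x, y, z, d):
    """Project scene point (x, y, z) through a pin-hole at the origin onto
    an image plane a distance d behind it. z is the depth of the point in
    front of the pin-hole and must be positive."""
    if z <= 0:
        raise ValueError("point must lie in front of the pin-hole")
    # Similar triangles; the minus signs record that the image is inverted.
    return (-d * x / z, -d * y / z)


def image_height(object_height, object_distance, d):
    """Size of the image of an object facing the camera squarely."""
    return d * object_height / object_distance


# A 2 m tall object 10 m away: doubling the pin-hole-to-plane distance
# doubles the image size, while the scene itself is unchanged.
print(image_height(2.0, 10.0, 0.05))
print(image_height(2.0, 10.0, 0.10))
```

The same relation shows why image size falls off with object distance: the depth of the point appears in the denominator.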

When the camera has a lens, then the purpose (of this lens) is to sort rays emanating from each object point, and once again to focus these rays to corresponding image point(s); however now each object point is the source of many more rays which pass through the larger lens aperture, allowing for a brighter image which has a higher contrast between bright and dark regions.

Camera Lens

A camera lens has other functions aside from a simple requirement to improve light-gathering power or image brightness.

Lens features include:

- Aperture: most modern lenses allow the size of the lens aperture to be adjusted to change the intensity of light reaching the image plane.

- Focal Length and Field-of-view: Focal length is the distance from the optical centre of the lens to the image sensor, or focal plane, when the lens is focused at infinity. A shorter focal length results in a wider field-of-view, while a longer focal length results in a narrower field-of-view. For example, on a full-frame camera a 20 mm lens is a wide-angle lens, while a 500 mm lens is a telephoto lens.

- Magnification: Longer focal length lenses make distant objects appear larger.

- Lens type: Prime lenses, or fixed focal length lenses, are typically more compact and lightweight than zoom lenses or variable focal length lenses. They also tend to have a larger maximum aperture, used in low-light conditions.

- Camera sensor: The relationship between focal length and field-of-view also depends upon the size of the camera’s sensor. A cropped sensor can be 1.5–1.6 times smaller (in linear dimensions) than a full-frame sensor, so an 18 mm lens on a cropped sensor gives roughly the same field-of-view as a 27–29 mm lens on a full-frame sensor.
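The link between focal length, sensor size, and field-of-view listed above can be sketched as follows. The sketch assumes an ideal rectilinear lens focused at infinity and a full-frame sensor width of 36 mm; the function names are illustrative only.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # assumed full-frame sensor width


def field_of_view_deg(focal_length_mm, sensor_width_mm=FULL_FRAME_WIDTH_MM):
    """Horizontal field-of-view of an ideal rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def full_frame_equivalent_mm(focal_length_mm, crop_factor):
    """Focal length giving the same field-of-view on a full-frame sensor."""
    return focal_length_mm * crop_factor


cropped_width = FULL_FRAME_WIDTH_MM / 1.5  # sensor with a 1.5x crop factor

# An 18 mm lens on the cropped sensor and a 27 mm lens on full frame
# should report the same horizontal angle.
print(field_of_view_deg(18, cropped_width))
print(field_of_view_deg(full_frame_equivalent_mm(18, 1.5)))

# Wide-angle versus telephoto on a full-frame sensor.
print(field_of_view_deg(20), field_of_view_deg(500))
```

Real lenses depart from this model (fisheye lenses in particular), so the figures are a guide rather than a specification.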

Aside from mirrors, cameras are the most basic and common type of perspective instrument. Accordingly, it is useful to explore camera functions in detail.

Image Aspect Ratio

An important factor for a ‘central perspective’ camera is the image aspect ratio. This refers to the rectangular shape, or proportions, of the image as projected onto the picture plane, being the ratio of its width to its height, expressed as a ratio of 2 integers, such as 4:3, or in a decimal format, such as 1.33:1 or simply 1.33.
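As a small illustration of the two notations, the sketch below reduces a width and height to an integer ratio and to its decimal form. The pixel dimensions used are assumptions chosen for the example.

```python
from fractions import Fraction


def aspect_ratio(width, height):
    """Return the aspect ratio as an 'a:b' string and as a decimal."""
    ratio = Fraction(width, height)  # reduces to lowest terms
    return f"{ratio.numerator}:{ratio.denominator}", float(ratio)


print(aspect_ratio(640, 480))    # a 4:3 frame
print(aspect_ratio(1920, 1080))  # a 16:9 frame
```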

Different aspect ratios provide different aesthetic effects for the human viewer, and standards have been developed which have varied over time. During the silent movie era, aspect ratios varied, from square 1:1, all the way up to the extreme widescreen 4:1 named Polyvision. However, around the year 1910, motion pictures settled on the ratio of 4:3 (1.33). The next big change in aspect ratios did not occur until the 1950s, instigated by the popularity of the three-projector Cinerama system with a ratio of 2.65:1. Later, other widescreen ratios provided cinematographers with a broader film frame in which to compose their images.

Many different photographic systems were used in the 1950s to create widescreen movies, but one dominated: the anamorphic process. This used anamorphic or ‘scope aspect’ lenses to optically squeeze the image horizontally onto an ordinary-sized film frame or format (normally 36 mm by 24 mm), and thus to capture twice the horizontal field, relative to the vertical, of standard “spherical” or ‘flat aspect’ lenses.

Anamorphic lenses are still in use today for the same purpose. The first commonly used anamorphic format was CinemaScope, which used a 2.35 aspect ratio, although it was originally 2.55. CinemaScope was used from 1953 to 1967, but after that time changes to projection standards altered the aspect ratio from 2.35 to 2.39.

It is important to realise that changes to the aspect ratio of anamorphic 35 mm photography are specific to camera or projector gate-size/aperture, a basic limitation for optical system design. After the “widescreen wars” of the 1950s ended, the motion picture industry settled on 1.85 as a standard for theatrical film projection. Europe and Asia opted for 1.66 early on, although 1.85 dominates today (for both film and digital formats as projected in theatres). Certain “epic” movies still use the anamorphic 2.39.

In the 1990s, with the advent of high-definition video, television adopted the 1.78 (16:9) ratio as a compromise between the theatrical standard of 1.85 and television’s 1.33, as it was not practical to produce a traditional CRT television tube with an aspect ratio of 1.85. Until that change, nothing had been filmed in 1.78. Today, however, this is the standard for high-definition video and widescreen television, and for some digital film formats as well.

Lens Choice and Perspective

As you may have realised, professional camera lenses are often interchangeable, or swapped on and off a single camera body, for use in still and motion picture photography. Changing lenses enables control of the nature and form of optical images captured by a camera, as explained below.

To begin, lens focal length is a fundamental determinant of the magnification of the image, and hence of the field-of-view as projected onto the sensor or photographic film. A longer focal length gives greater magnification and a narrower field-of-view, whilst short focal length lenses provide lower magnification and a wider field-of-view.

But how does lens focal length affect perspective form (geometrical image form)? Two common and opposing misconceptions are that changing the focal length of the lens will either: A) always change the perspective captured in an image, or B) never change the perspective captured in an image! However, the situation is complex and several factors come into play. The answer to this question depends upon how you define perspective form, and relates to scene field-of-view, depth-of-field, perspective aspect and shape distortions introduced, plus lens distortions, etc. Let us now consider some scenarios.

If you take a photograph with a 35 mm focal length lens, and then one using a 55 mm lens, comparing the two images may at first give the impression that the perspective of the image is largely unchanged, apart from magnification and hence field-of-view. Taking a closer look at the image however, reveals that the shape of the spatial object/scene has undergone a complete change in outline shape.

As explained earlier the scale/shape/size problem does mean that image shape changes with image scale (ref. outline detail). This is a subtle point often misunderstood by some who claim that the two lenses produce the same perspective distortions and image shape, because if you scale the captured images to the same size then they can sometimes appear nearly identical. However, by scaling you have always adjusted the appearance/length of outline shapes (changed the outline detail, or ‘jaggedness’ appearance of scene/object outlines)! So we can conclude that lenses with different focal lengths project images with different image forms/shapes!

And other noticeable visual changes are apparent between lenses of different focal lengths, including those associated with spatial scale and geometrical distortions in image space; leading to (for example) different apparent motion speeds for both camera movements and scene/object motions, etc.

Changing the lens focal length on a camera can change the perspective of a photograph, but it’s not the only factor that affects perspective:

- Field-of-view: Changing the lens changes the field of view, but it may not change the perspective dramatically if the camera position doesn’t change.

- Distance from subject: Changing the distance between the camera and the subject changes the perspective.

- Angle-of-view: The angle at which the camera is pointed can change the perspective.

- Lens type: Different lenses can change the perspective in different ways. Wide-angle lenses make objects appear further apart, and can create an illusion of depth. Longer lenses magnify everything in the field-of-view, which can make distant objects appear closer.
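The roles of camera distance and focal length in the list above can be compared numerically. The sketch below uses an idealised pin-hole model, which ignores lens distortion and the outline-detail effects discussed earlier; the function names and the sample distances are assumptions made for this example.

```python
def image_size(object_height, depth, focal_length):
    """Image size of an object under an ideal pin-hole model."""
    return focal_length * object_height / depth


def near_far_ratio(camera_to_near, separation, focal_length):
    """How much larger a near object appears than an identical object
    placed 'separation' further away along the line of sight."""
    near = image_size(1.0, camera_to_near, focal_length)
    far = image_size(1.0, camera_to_near + separation, focal_length)
    return near / far


# Same camera position, different lenses: the ratio is unchanged.
print(near_far_ratio(2.0, 3.0, 35.0), near_far_ratio(2.0, 3.0, 55.0))

# Same lens, camera moved back: the ratio drops towards 1, which is the
# 'compressed' look often attributed to long lenses.
print(near_far_ratio(2.0, 3.0, 35.0), near_far_ratio(10.0, 3.0, 35.0))
```

Within this simplified model the focal length cancels out of the ratio, so the relative sizes of near and far objects are set by the camera position alone.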

Photographic Innovations

Cinema and photography are optical media enabled and limited by the perspective principles, methods, types, and also the instruments employed to capture and display accurate images of physical reality.

For over 100 years there has been a steady improvement in optical perspective-related technology, resulting in today’s remarkably life-like images. In many cases these images go far beyond the capabilities of the human eye in terms of image clarity, resolution, field-of-view, etc., and also in terms of the efficiency and effectiveness with which perspective images can be captured, transported, and presented. Let us now take a brief survey of these innovations.

Film Format / Gauge

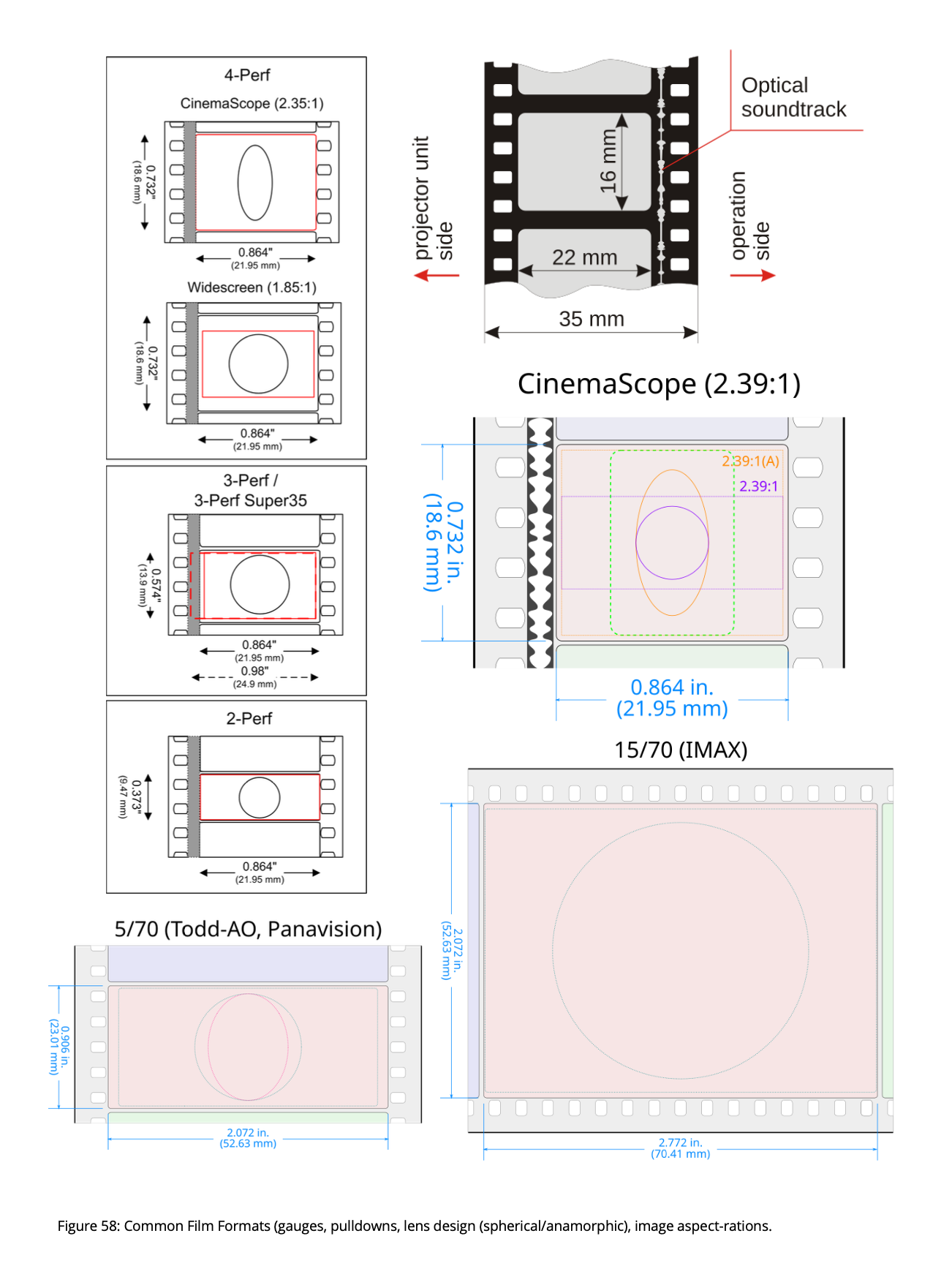

Film format refers to a set of standard characteristics regarding image capture on photographic film. Important characteristics include the film gauge, negative pulldown, lens design (spherical or anamorphic), and camera/projector gate, plus aspect-ratio or optically projected dimensions of the image.

Film gauge is a physical property of photographic or motion picture film which defines its width. Traditionally, the major movie film gauges are 8 mm, 16 mm, 35 mm, and 65/70 mm. There have been other gauges, for example in the silent era, most notably 9.5 mm film, and more recently others ranging from 3 mm to 75 mm. Notably, a larger film gauge is associated with (potentially) improved image quality and higher image detail, but also with greater materials expense, heavier camera equipment, larger and costly projection equipment, plus greater bulk and weight for film distribution and storage.

Negative Image / Pulldown

Negative pulldown is how a photographic image is exposed onto the film, and is identified by the number of film perforations spanned by an individual frame. It also relates to the orientation of the image on the negative, being either horizontal or vertical. Using a camera that changes the number of exposed perforations allows a cinematographer to change both the aspect ratio of the image and the size of the exposed area on the film stock (which affects image quality, and resolution or clarity).

The most common negative pulldown formats for 35 mm film are 4-perf and 3-perf, the latter of which is usually used in conjunction with Super 35. Vertical pulldown is most common, although horizontal pulldown is used in IMAX, VistaVision, and 35 mm still cameras. For cinema applications, it is striking how the choice of film format and gauge, and in particular the size and aspect ratio of the image that is projected onto the cinema screen, affects the overall feeling of realism, and 3-D or spatial immersion.
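The effect of the perforation count on frame size can be sketched with simple arithmetic. The perforation pitch of roughly 4.75 mm used below is an assumed nominal value for 35 mm film, and the function names are illustrative.

```python
PERF_PITCH_MM = 4.75  # assumed nominal perforation pitch for 35 mm film


def frame_advance_mm(perforations, pitch_mm=PERF_PITCH_MM):
    """Length of film pulled down per frame for a given perf count."""
    return perforations * pitch_mm


def film_saving(perfs_before, perfs_after):
    """Fraction of film stock saved by moving to a smaller pulldown."""
    return 1 - perfs_after / perfs_before


print(frame_advance_mm(4), frame_advance_mm(3))  # 4-perf versus 3-perf
print(film_saving(4, 3))                         # stock saved by 3-perf
```

A shorter pulldown leaves less height for each frame, which is why changing the perf count alters both the usable aspect ratio and the exposed area.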

Widescreen Movies

In terms of film gauge, 35 mm is the standard gauge most commonly used in still and motion photography. Film gauge refers to the approximate width of the photographic film, which can be exposed in several different ways by the camera gate to produce different optical image sizes and/or aspect ratios. When used with vertical film pulldown, the negative typically has an aspect ratio of about 1.37 (the Academy ratio, 1.375:1).

However, so-called ‘widescreen’ 35 mm negative exposures are possible by using an anamorphic format. Here an anamorphic lens is used on the camera and projector to produce a wider image, with an aspect ratio of about 2.39:1 (commonly referred to as 2.40:1). The image, as recorded on the negative and on the print that is projected in cinema theatres, is horizontally compressed (squeezed) by a factor of 2. One such example is CinemaScope, which was used from 1953 to 1967 for shooting widescreen films that, crucially, could be screened in theatres using existing equipment, albeit with an anamorphic lens adapter.

Cinerama is a different widescreen process that originally projected images simultaneously from three synchronized 35mm projectors onto a large, deeply curved screen. This system involved shooting with three 35 mm cameras sharing a single shutter. However, the process was later abandoned for a system using a single camera and a 70mm film gauge. The latter system lost the 146-degree captured field of view of the original three-strip system, and its resolution (and immersion) was markedly lower.

VistaVision is a higher resolution, widescreen variant of the 35 mm motion picture film format created by Paramount Pictures in 1954. This system did not use anamorphic processes but improved the quality by orienting the 35 mm negative horizontally in the camera gate and shooting onto a larger area, which yielded a finer-grained or higher-resolution image. Paramount dropped the format in 1961, but the format was later used for the first three Star Wars films and for special-effects sequences in other feature films generally.

A major theme is becoming clear: filmmakers have generally sought to produce larger and wider cinema images that, when projected on the cinema screen, take up more of the viewer’s field-of-view (but with good image quality, clarity, or optical resolution). In this manner, spatial immersion is enhanced. This can be achieved by using anamorphic lenses, by seeking out larger film gauges, or else by alternative optical image capture orientations that allow larger images on the negative. Of course, a larger film gauge naturally allows a larger and sharper optical image to be captured on the negative film.

70mm Films

70 mm film (or 65 mm film) is a high-resolution film gauge standard that uses a negative area nearly 3.5 times as large as the standard 35 mm film format. As used in cameras, the film is 65 mm (2.6 in) wide, supporting wide-angle images when used with appropriate lenses. For projection, the original 65 mm film is printed on 70 mm (2.8 in) film. Some venues continue to screen 70 mm to this day.

An anamorphic lens combined with 65 mm film allows for extremely wide aspect ratios to be used while still preserving image quality/resolution. This was used in the 1959 film Ben-Hur and the 2015 film The Hateful Eight, both of which were filmed with the Ultra Panavision 70/MGM Camera 65 process at an aspect ratio of 2.76:1. The latter film required the use of a 1.25x anamorphic lens to horizontally compress the image, and a corresponding magnification reversing lens on the projector. The overall effect (in a cinema theatre) is a startling improvement in field-of-view, immersion, and image sharpness or quality.
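The relation between the projected aspect ratio, the squeeze factor, and the shape of the image actually recorded on the negative can be sketched as below. The projected ratios and squeeze factors are those quoted in the text; the function names are assumptions, and the recorded ratios are derived by arithmetic only, not taken from a format specification.

```python
def recorded_aspect(projected_aspect, squeeze):
    """Aspect ratio of the squeezed image as it sits on the negative."""
    return projected_aspect / squeeze


def projected_aspect(recorded, squeeze):
    """Aspect ratio after the projector lens reverses the squeeze."""
    return recorded * squeeze


# 35 mm anamorphic: a 2.39:1 picture squeezed by a factor of 2.
print(recorded_aspect(2.39, 2.0))

# Ultra Panavision 70: a 2.76:1 picture squeezed by a factor of 1.25.
print(recorded_aspect(2.76, 1.25))
```

The smaller squeeze factor suffices on 65 mm film because the unsqueezed frame is already much wider than a 35 mm frame.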

3-D or Stereoscopic Movies

Stereoscopic cinema or photography is a form of 3-D imaging/display that takes advantage of the human binocular system to enhance the impression of looking into a spatial reality or 3-D space/scene. So-called 3-D or stereoscopic films give an illusion of three-dimensional depth or spatial solidity, usually with the help of special glasses worn by viewers.

Stereoscopic cinema has been around since 1915, but has mostly been relegated to a niche in the motion picture industry because of the costly hardware and processes needed to capture, produce, and display a 3-D film. However, 3-D films experienced a worldwide resurgence in the 1980s and 1990s driven by IMAX high-end theatres. 3-D films also became increasingly successful throughout the 2000s, peaking with the success of 3-D movies such as Avatar in December 2009.

Circle Vision 360

Circle-Vision 360° is a film format developed by The Walt Disney Company that uses several projection screens that encircle the audience. It is essentially a form of cylindrical perspective, projected onto the inside of a cylindrical screen.

In Circle-Vision 360°, the screens are arranged in a circle around the audience, with small gaps between the screens allowing projectors to be placed in these gaps, above the heads of the viewers. Circle-Vision 360° cameras have been mounted on the top of automobiles, or in helicopters, for immersive perspective travelogue scenes.

IMAX Cinema System

IMAX is a diverse system of high-resolution cameras, film formats, film projectors, and theatres, known in particular for very large screens with a tall aspect ratio (approximately either 1.43:1 or 1.90:1) and steep stadium seating. The 1.43:1 ratio corresponds to the IMAX film format. However, IMAX screens are available only in a few select locations.

IMAX has two camera systems, firstly the 70mm IMAX film format, and secondly the IMAX Digital GT. Both types of camera images can be displayed on special large screens 18 by 24 metres (59 by 79 feet) which normally today use digital laser projectors. The 2023 film Oppenheimer was shot in IMAX 65mm large format film, and then transferred to IMAX 70mm cinema film, and at 3 hrs is the longest IMAX 70mm film ever produced.

The film-based IMAX 70mm projectors do have much higher resolution and clarity than the digital version of this format, and unlike most conventional film projectors, the film runs horizontally so that the image width can be greater than the width of the film stock (horizontal pulldown). It is called the 15/70 format. Associated theatres are often purpose-built and dome/spherical-shaped theatres.

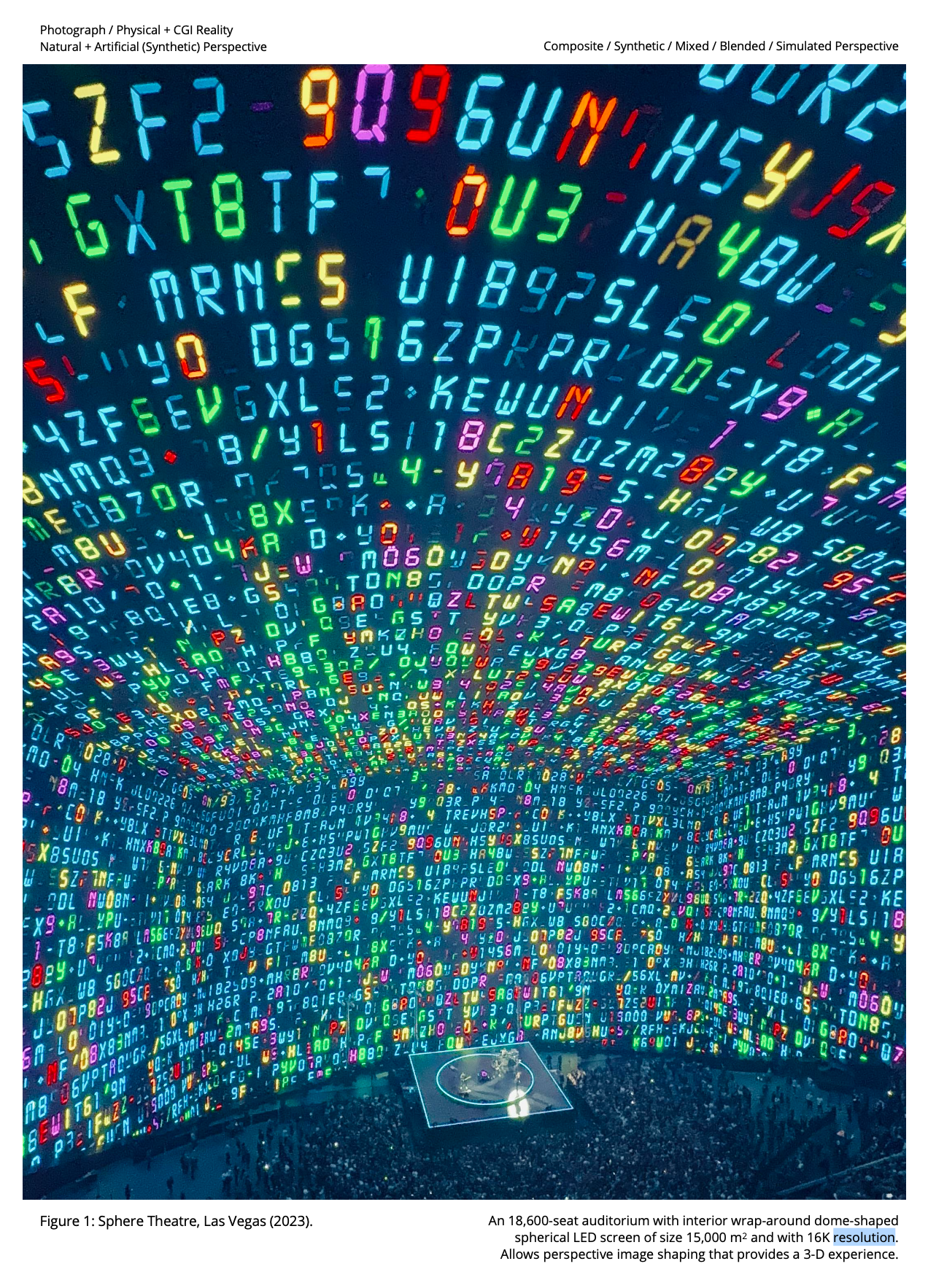

Sphere Theatre

The Sphere Theatre in Las Vegas is a new all-digital venue built in 2023, which seats 18,600 people and includes a 16K resolution wraparound dome/spherical-shaped interior LED screen. The venue’s exterior also features 580,000 sq ft (54,000 m²) of LED displays. Sphere measures 366 feet (112 m) high and 516 feet (157 m) wide.

Film (or more accurately digital moving) images to be displayed in the Sphere Theatre are captured with a special camera called the Big Sky camera, with an 18K pixel sensor that measures 77.5 mm by 75.6 mm (3.05″ by 2.98″). The system is capable of capturing images at 120 fps and producing data at 60 gigabytes per second. The camera includes a pair of lenses (to capture content in stereoscopic 3-D) that cover this sensor resolution/size and match the size/shape and field-of-view of the screen. The curvature of the dome’s screen makes a fisheye lens a natural fit; however, it has to resolve extraordinary detail for the 16K pixels of the screen.

The audience’s normal vision inside the Sphere places the most important part of the image in the lower quarter of the screen, because this is the most comfortable and frequent viewing angle. However, there is still a considerable image segment above the audience’s head, so when a typical scene is shot, the camera must be tilted at 55 degrees to capture an angle-of-view that fills the entire screen, with the “centre” of the action framed through the lower edge of the lens, normally a suboptimal region for a fisheye lens whose periphery yields poor image quality.
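A back-of-envelope calculation gives a feel for the data volumes quoted above. The sketch assumes decimal units (1 gigabyte = 10^9 bytes) and a constant data rate; the function names are illustrative.

```python
def bytes_per_frame(bytes_per_second, frames_per_second):
    """Average amount of data produced for each captured frame."""
    return bytes_per_second / frames_per_second


def seconds_per_terabyte(bytes_per_second):
    """Recording time that fits in one terabyte (10**12 bytes)."""
    return 1e12 / bytes_per_second


rate = 60e9  # 60 gigabytes per second, as quoted for the camera
print(bytes_per_frame(rate, 120) / 1e9, "GB per frame")
print(seconds_per_terabyte(rate), "seconds of footage per terabyte")
```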

Hence for Sphere, the team had to create a special 165-degree-horizontal-angle-of-view fisheye lens with superior edge-to-edge field resolution. The result is a lens the size of a dinner plate, but with excellent sharpness and contrast reproduction right to the edges of the angle-of-view. Plus there is little chromatic aberration in the image, even to those extreme edges.

Top: External View of Theatre with LED Display, Bottom: Internal Theatre LED Screen

Image Facets

The projected forms present in a camera image depend on many optical factors. For example, opening up the aperture of a lens lets in more light to enhance the brightness and contrast of the image, but this will also reduce the depth-of-field or region of object space that the lens projects to a sharp focus. Also, very wide-angle lenses (less than 18mm focal length) tend to have a deeper depth-of-field, but sometimes at the expense of the introduction of aberrations such as curvilinear distortions, etc.
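The trade-off between aperture, focal length, and depth-of-field can be illustrated with the standard hyperfocal distance approximation, H = f²/(N·c) + f: focusing at H keeps everything from about H/2 to infinity acceptably sharp, so a smaller H means a deeper depth-of-field. The circle-of-confusion value of 0.03 mm is an assumed conventional figure for a full-frame sensor, and the function name is illustrative.

```python
def hyperfocal_mm(focal_length_mm, f_number, circle_of_confusion_mm=0.03):
    """Hyperfocal distance in millimetres (thin-lens approximation)."""
    return focal_length_mm ** 2 / (f_number * circle_of_confusion_mm) + focal_length_mm


# Opening the aperture (f/8 to f/2) pushes the hyperfocal distance out,
# which means a shallower depth-of-field.
print(hyperfocal_mm(50, 8), hyperfocal_mm(50, 2))

# A wide-angle lens at the same f-number has a much shorter hyperfocal
# distance, which means a deeper depth-of-field.
print(hyperfocal_mm(18, 8))
```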

Another factor to consider is the camera integration time, or snapshot time interval, during which the shutter exposes the sensor or film to the spatial object/scene. For fast moving objects, or to capture moving images, a relatively short exposure time is required; for example, new digital film cameras can capture 150-600 frames per second!

Patently, there is a close and reciprocal relationship between aperture size and captured frames-per-second: opening up the lens aperture allows for a faster frame rate whilst capturing the same light level at the image plane. Sensor resolution is another important factor that determines the sharpness or resolution attained in the final image, being a factor that is typically fixed for a particular configuration of camera body, sensor, and lens.
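The reciprocal relationship between aperture and frame rate can be sketched numerically. The sketch assumes that the light collected per frame is proportional to t/N² (exposure time t, f-number N), that exposure time is a fixed fraction of the frame interval, and that scene brightness and sensor sensitivity are unchanged; the function names are illustrative.

```python
import math


def f_number_for_frame_rate(f_number, fps_old, fps_new):
    """f-number that keeps the light per frame constant when the frame
    rate changes, given exposure proportional to t / N**2."""
    return f_number * math.sqrt(fps_old / fps_new)


def stops_to_open(fps_old, fps_new):
    """Number of stops the aperture must open (negative means close)."""
    return math.log2(fps_new / fps_old)


# Going from 24 fps to 96 fps quarters the exposure time per frame,
# so the aperture must open by two stops, e.g. from f/8 to f/4.
print(f_number_for_frame_rate(8.0, 24, 96))
print(stops_to_open(24, 96))
```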

Optical Assembly

Optical assembly refers to the interrelation of a specific perspective method/system and associated scene/object structures including object space frameworks, plus camera design/form. As discussed we have different kinds of cameras, including monocular, binocular, and multi-ocular cameras depending upon the number and types of apertures employed. Of course for multi-view perspectives it may be that images from multiple cameras are combined into a single image space, and by one method or another, but as yet such systems are rare.

For monocular perspective imaging, we have three basic camera types:

- Flat or 2-D Image Plane: painting, photograph, movie

- Cylindrical shaped Image Plane: Cinerama, Circle-Vision

- Spherical shaped Image Plane (or Projection Plane): IMAX, Sphere Theatre Las Vegas.

Earlier, we explained how the spatial objects/scenes are related to perspective image forms, and also relevant image display/projection systems.

Observation Mode

Now we come to the issue of observation mode, or camera observation scenario, which relates to three observation scenarios:

- Sphere or Cylinder of Revolution Perspective: multiple station-points, single camera focus point.

- Sphere or Cylinder of Vision Perspective: single station point, multiple camera focal points.

- Arbitrary Camera Setup(s): single/roaming station point, single/roaming camera focal point.

We can link these observation modes to three basic methods of looking at the world. First, looking-in/looking-at perspective uses an eye/camera/representation to explore close-up/narrow-field perspective images of a spatial object/area (ref. moving station point on a Sphere of Revolution). Secondly, looking-out/around perspective uses an eye/camera/representation to explore distant/wide-angle perspective images of a spatial scene (ref. fixed station point on a Sphere of Vision). Finally, looking-through perspective allows seeing ‘through’ spatial scenes and/or perspective windows, or seeing ‘inside’ spatial objects, using transparent perspective methods and/or special projection displays.

Patently, the captured image form will depend upon the camera type. However, it is important to realise that it is sometimes possible (for example) to capture ordinary 2-D images from multiple viewpoints and then have these same images transformed into a single image space that represents a spherical observation scenario as detailed above. This procedure can sometimes be performed using special mathematical and/or digital techniques, etc.
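One common digital technique of this kind maps each pixel of an ordinary flat image to a viewing direction, and then to a position in a spherical (equirectangular) panorama. The sketch below is a minimal illustration for a single station point with the camera rotated about the vertical axis; the coordinate conventions (x right, y up, z forward), the function names, and the panorama size are assumptions made for this example.

```python
import math


def ray_from_pixel(px, py, focal_px, cx, cy, yaw_deg=0.0):
    """Viewing direction of pixel (px, py) for an ideal pin-hole camera
    with focal length focal_px (in pixels) and image centre (cx, cy),
    after turning the camera yaw_deg to the right."""
    x, y, z = px - cx, cy - py, focal_px  # image rows grow downwards
    t = math.radians(yaw_deg)
    return (x * math.cos(t) + z * math.sin(t),
            y,
            -x * math.sin(t) + z * math.cos(t))


def to_equirectangular(ray, pano_w, pano_h):
    """Map a viewing direction to (u, v) in an equirectangular panorama
    whose centre column corresponds to the forward direction."""
    x, y, z = ray
    lon = math.atan2(x, z)                                # -pi .. pi
    lat = math.asin(y / math.sqrt(x * x + y * y + z * z)) # -pi/2 .. pi/2
    return ((lon / (2 * math.pi) + 0.5) * pano_w,
            (0.5 - lat / math.pi) * pano_h)


# The centre pixel of a camera turned 90 degrees to the right lands
# three quarters of the way across the panorama, on the horizon line.
ray = ray_from_pixel(960, 540, 1000.0, 960, 540, yaw_deg=90.0)
print(to_equirectangular(ray, 4096, 2048))
```

Repeating this for every pixel of several differently oriented images fills in the panorama; practical stitching software additionally handles lens distortion, blending, and differing station points.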

Panorama: Expanding the Visual Field

A fundamental feature of all perspective methods/systems/instruments relates to the concept of field-of-view (FOV), which for an outward-looking optical instrument is a measure of the proportion of the total environment (180°/360°) captured in a single perspective image taken from a particular viewpoint. Often we relate such images to the natural monocular FOV of the human eye, known to be around 135 degrees in the horizontal direction (HFOV), and 180 degrees vertically (VFOV).

Over the centuries there have been attempts to go beyond these limitations in natural vision, specifically to facilitate viewing, matching/modelling and representation of ‘captured’ physical reality. Artists have explored cylindrical and spherical surfaces on which to depict their paintings over a wider FOV. In the 20th century, photography contributed to these developments. A whole range of lenses was produced, from extreme wide angle (84°-179°), to wide angle (63°-83°), and normal (34°-62°), etc.

Wide-field developments happened on three fronts: fisheye, multi-camera (poly-dioptric) and catadioptric cameras. In the open air, there is a maximum of 180 degrees attainable using a fisheye lens. Alternatively, through a second method of multiple cameras in the form of a dome, we can compress spatial reality to capture/represent up to 360 degrees for a total view of the environment. Simple versions typically entailed symmetrical numbers such as 2, 4, or 8 cameras, leading to cylindrical panoramic images. These methods have been complemented by spherical configurations such as the Google Street View and related cameras, leading to ever more dramatic omnidirectional perspective images.
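For the multi-camera approach, the number of cameras needed to close a full horizontal ring follows from the field-of-view of each camera. The sketch below assumes identical cameras spaced evenly around a circle, with an optional overlap between neighbouring views for stitching; the function name and the sample angles are illustrative.

```python
import math


def cameras_for_full_ring(hfov_deg, overlap_deg=0.0):
    """Smallest number of identical cameras whose horizontal fields of
    view cover 360 degrees, with overlap_deg shared between neighbours."""
    usable = hfov_deg - overlap_deg
    if usable <= 0:
        raise ValueError("overlap must be smaller than the field-of-view")
    return math.ceil(360.0 / usable)


print(cameras_for_full_ring(90))       # four cameras with no overlap
print(cameras_for_full_ring(60, 15))   # eight cameras with 15 degree overlap
```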

The third method lay in catadioptric lenses, which typically involve mirrors: parabolic, hyperbolic, elliptical or planar. These principles are used to capture/project omnidirectional perspective images, which can subsequently be translated into cylindrical/spherical panoramic images. In addition to the original scene’s shape (object space captured visual field) and the recorded scene’s shape (image space visual image), there is the question of the projected surface (displayed image on a display surface), which can range from a shallow/deep concave bowl, to a hemispherical or full spherical dome, which can in turn entail its interior or exterior surface.

Television had similar issues, where larger and higher-resolution screens have become a trend. On the one hand, there are ever more dramatic 8K, 10K, and even 18K resolution screens (Sphere Theatre, Las Vegas). On the other hand, new kinds of stereoscopic screens are being introduced, where technology works to expand the ‘local’ viewing field for 3-D objects (ref. sphere of revolution perspective). In sum, panoramic perspective(s) are set to remain popular, as we seek to match/expand the natural visual field.

Holographic, Volume and LED Displays

As described above, the area which has seen the most serious applications of panoramic methods is Cinema, and these applications have been documented in an excellent study by Oettermann (1979). This principle was applied to the world of Cinema by Disney in their Circle-Vision 360° (1955) and developed independently at a much higher resolution by the founders of IMAX for the World Fair in Montreal (1967). Spherical or 360-degree views have become increasingly popular, with domed and spherical screens. Subsets of a 360-degree view are found in Paseo Lumenscapes and the Atmasfera 360 screen in Kiev. The best-known examples are IMAX theatres, some of which encompass a large portion of a sphere.

In terms of emerging technologies, there are drones, which permit 360-degree views from the air, or complex 360-degree cinematic cameras, which lead to full 360-degree views. Effectively, each of the camera types applies the principles of perspective. Having 8, 12, 14, 16 or 22 on-board cameras simply multiplies the principle to cover the entire field of vision. But in any case, a single drone, using environmental fly-throughs together with multi-view and motion perspectives, can provide the Cinema or Television viewer with a highly realistic sense of a 3-D space.

These examples provide visual correspondence as:

- Copying: reproducing the appearance of the world; and

- Matching: reproducing the different ways/degrees that human vision experiences the physical world.

Holographic displays, similar to the Princess Leia hologram scene from Star Wars, have been an ongoing fascination. However, as yet no practical moving-image holographic displays/TVs or cinema systems have been produced.

Volume displays represent a key technology trend, of which Circle-Vision and IMAX were initial versions. For example, as mentioned earlier, a remarkable recent innovation is the Sphere Theatre venue in Las Vegas, which is a new type of spherical display that is 112 m high and 157 m wide at its broadest point. It includes seating for 18,600 people. Sphere’s interior is equipped with a 16K resolution wraparound LED screen, measuring 15,000 m². It is the largest and highest-resolution LED screen in the world, and can show real-time movies and Computer-Generated Graphics, etc. This interior sphere can be used to produce remarkable perspective effects, such as production of a full dome-view/vista of the scene outside the theatre, effectively making the theatre roof disappear!

Another trend in volume displays is the LED Wall. For example, the StageCraft Virtual Environment is an on-set virtual production visual effects technology composed of a video wall designed by Industrial Light & Magic (ILM).

On-Set Virtual Production

On-set virtual production (OSVP) is a technology for television and film production in which many LED panels are joined together to produce a large screen, which is then used as a backdrop for a filming set, and on which video or computer-generated imagery can be displayed in real-time. OSVP became widespread after its use in the first season of The Mandalorian (2019), where the backgrounds were rendered live using Unreal Engine, a real-time 3-D graphics engine developed by Epic Games.

A large-scale LED or volume screen is sometimes called a virtual stage. It is an interesting development that enables a type of blended-scene perspective and synthetic perspective: natural perspectives from physical actors and real environmental objects are combined with projected, moving/changing graphical (new media perspective) CGI scenes whilst the moving images are being captured. This technique allows the film camera to move around the set in real-time, capturing multi-view perspectives, whilst software generates the appropriate background views in correct perspective (that is, with the correct viewpoint-dependent geometric transformations of image shape, scale, position and angle). The results are practically indistinguishable from a scene filmed with a real-world backdrop.
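The viewpoint-dependent transformation described above can be illustrated with a minimal sketch. It assumes a flat LED wall lying in the plane z = 0, a tracked camera in front of the wall (negative z) and a virtual scene point behind it (positive z); the function name `wall_point_for_camera` and the coordinate convention are hypothetical choices made for this illustration, not part of any production system. The sketch simply intersects the camera-to-point sight line with the wall, which is where the point must be drawn to appear in correct perspective from that camera position.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def wall_point_for_camera(camera: Vec3, scene_point: Vec3,
                          wall_z: float = 0.0) -> Tuple[float, float]:
    """Return the (x, y) position on the wall plane z = wall_z at which a
    virtual scene point must be drawn so that it lines up correctly when
    seen from the given camera position (simple ray-plane intersection)."""
    cx, cy, cz = camera
    px, py, pz = scene_point
    dz = pz - cz
    if dz == 0:
        raise ValueError("sight line is parallel to the wall")
    # Fraction of the way from the camera to the scene point at which
    # the sight line crosses the wall plane.
    t = (wall_z - cz) / dz
    if t <= 0:
        raise ValueError("wall is not between the camera and the scene point")
    return (cx + t * (px - cx), cy + t * (py - cy))


if __name__ == "__main__":
    distant_tree = (0.0, 1.5, 6.0)   # virtual point 6 m behind the wall
    for camera_x in (0.0, 2.0):      # camera trucks 2 m to the right
        camera = (camera_x, 1.5, -4.0)
        print(camera_x, wall_point_for_camera(camera, distant_tree))
```

In this sketch, a virtual point lying exactly on the wall plane never moves as the camera moves, whereas points further behind the wall shift across the wall by a larger fraction of the camera's own displacement. This is the motion-parallax behaviour that the background must reproduce for the blended scene to look real.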

The outstanding success of virtual production and the associated volume screen technology, combined with live perspective transformations calculated as a background for physical actors, makes one wonder where this will lead. Perhaps one day our televisions or movie theatres will surround us and we will move inside virtual reality, much like the holodeck in the Star Trek TV series!

Screens and Displays

An electronic visual display (EVD) is a display device that can display images, video, or text that is transmitted electronically (normally in digital form).

EVDs include television sets, computer monitors, and digital signage. They are ubiquitous in mobile computing, for example in tablet computers, smartphones, and information appliances. EVDs are sometimes referred to as screens or touch screens. Beginning in the early 2000s, flat-panel displays began to dominate the industry as cathode-ray tubes (CRT) were phased out, especially for computer applications. Soon LCD and OLED displays became the dominant types of screen used for TVs, computer monitors and mobile devices such as smartphones.

A major trend is a gradual shift towards higher resolution display formats, where the display resolution is the number of distinct pixels that can be displayed in each dimension. Early television systems used relatively low resolution such as 480 by 240 pixels or 576 by 288 pixels. Newer computer and television displays can support 4K or 8K resolution, with 3840 by 2160 or 7680 by 4320 pixels. Clearly, more pixels can lead to images of higher spatial resolution, and thus to improved levels of perspective resolution, so long as the camera images are captured, recorded, and transmitted to the display with sufficient resolution. Equally, the displayed (or represented) field-of-view depends on the field-of-view at which the images were captured by the camera.
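To make the relationship between pixel count, field-of-view and perceived detail more concrete, here is a small illustrative calculation. The helper names, the flat-screen assumption and the example screen width and viewing distance are our own assumptions for this sketch; "pixels per degree" is used here only as a simple average measure of angular resolution.

```python
import math


def pixel_count(width_px: int, height_px: int) -> int:
    """Total number of pixels in a display format."""
    return width_px * height_px


def screen_fov_deg(screen_width_m: float, viewing_distance_m: float) -> float:
    """Horizontal angle subtended by a flat screen for a viewer
    centred in front of it."""
    return math.degrees(2 * math.atan(screen_width_m / (2 * viewing_distance_m)))


def pixels_per_degree(width_px: int, fov_deg: float) -> float:
    """Average number of pixels available per degree of visual angle."""
    return width_px / fov_deg


if __name__ == "__main__":
    # Assumed example: a 1.5 m wide screen viewed from 2.5 m.
    fov = screen_fov_deg(1.5, 2.5)
    for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
        print(name, pixel_count(w, h), round(pixels_per_degree(w, fov), 1))
```

A 4K frame holds 8,294,400 pixels and an 8K frame 33,177,600, exactly four times as many. For a fixed screen and viewing position, doubling the horizontal pixel count doubles the average pixels per degree, but only if the source images carry that much detail.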

Curved Screens (external displays)

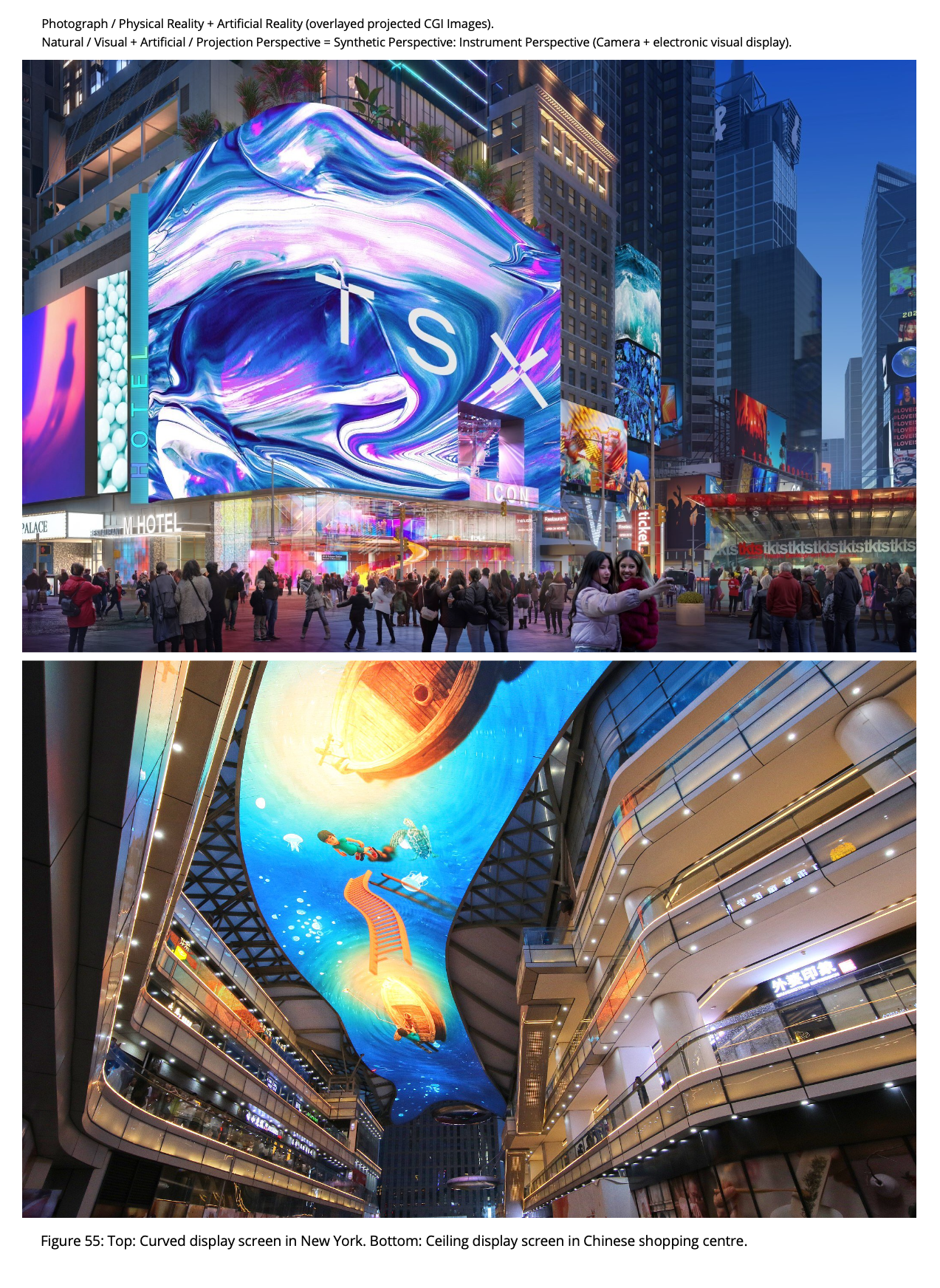

Starting in the mid-2010s, curved display panels began to be used in televisions, computer monitors, and smartphones. These started with relatively small LCD, OLED and quantum-dot screen technologies, and then culminated in giant LED screens of the types seen in modern digital billboards and the Sphere Theatre in Las Vegas.

It is important to consider the purpose and/or capabilities of an external curved screen display as seen in Figure 9 (top image). One might consider that such a screen supports cylindrical perspective as discussed earlier, whereby multiple viewpoints and/or multiple vanishing points are supported. Indeed, if a multi-view camera image or multi-view CGI image is produced that does contain a wide-field, multi-view, and/or multiple-vanishing-point perspective, then the corresponding (stitched together?) images displayed on the screen would in a way (appear to) support multiple viewing directions, as is the case with cylindrical perspective.

However, unless multiple cameras looking in different directions are used, and their views are then projected onto the correct regions of the display (as with Cinerama), this is not a true cylindrical perspective. In any case, no such techniques are normally employed, and the curved screen simply displays a flat image that is ‘stretched’ across the display surface at a single visual scale, with the usual geometry (the display itself is a form of 3-D). No extra 3-D effect is produced as a result, for the depicted or image space at least.
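The difference between a flat image 'stretched' across a curved screen and a true cylindrical perspective can be sketched numerically. The following is an idealised illustration under stated assumptions: the screen is a circular arc, the viewer sits on the axis of that arc, and the flat image comes from an ordinary (rectilinear) camera. The function names and the normalised image coordinate `u` (from -1 at the left edge to +1 at the right edge) are hypothetical conveniences.

```python
import math


def stretched_angle_deg(u: float, screen_arc_deg: float) -> float:
    """Direction in which the viewer sees image coordinate u when the flat
    image is stretched uniformly along a cylindrical screen arc."""
    return u * screen_arc_deg / 2


def true_angle_deg(u: float, camera_hfov_deg: float) -> float:
    """Direction in which a rectilinear camera with the given horizontal
    field-of-view actually recorded image coordinate u."""
    half_fov = math.radians(camera_hfov_deg / 2)
    return math.degrees(math.atan(u * math.tan(half_fov)))


if __name__ == "__main__":
    # Assumed example: 90-degree camera shown on a 90-degree screen arc.
    for u in (0.0, 0.25, 0.5, 0.75, 1.0):
        shown = stretched_angle_deg(u, 90.0)
        recorded = true_angle_deg(u, 90.0)
        print(u, round(shown, 2), round(recorded, 2), round(recorded - shown, 2))
```

In this example the two directions agree only at the centre and at the edges of the image; in between, the stretched image places content a few degrees away from the direction in which the camera actually saw it. A true cylindrical presentation would need to re-map (or separately capture) the image so that displayed and recorded directions coincide.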

However, the fact that this is a dimensional or volume display that can itself be seen from multiple directions gives a striking effect that enhances the visibility of displayed content even if not its actual 3-D perspective form (in terms of the captured/displayed image geometry). These same arguments also apply for most types of curved television and computer monitor displays, irrespective of whether they employ stereoscopic methods.

Screens (internal displays)

Starting in the early 2020s, very large format (10-20 m) curved and spherical display panels began to be used in theatres and also in virtual/mixed film production environments. Here a key goal is capturing and displaying the dimensionality (3-D aspects) of a spatial scene. True multi-view, perspective-corrected artificial images are often produced and then displayed on the multi-view perspective screen.

It is interesting to consider that for an artificial perspective display, there are four kinds of 3-D view:

- Uni-angular view of uni-angular image of spatial scene/object: Ordinary 2-D perspective image displayed on a surface display or computer monitor.

- Multi-angular view of uni-angular image of spatial scene/object: The term “3-D” is also used for a volumetric display that shows uni-angular images observed from multiple angles (the onlooker changes direction of gaze). The images are notionally viewed in 3-D, but without any image-based angular perspective changes. Only (uni-angular) perspective cues are available.

- Multi-angular view of multi-angular image of spatial scene/object: The term “3-D” is also used for a volumetric display that generates content which can be viewed from multiple angles, the content being multi-angular images captured or generated for viewing from multiple viewing angles. Here the onlooker may experience multi-angular perspective depth cues (on a multi-directional screen canvas).

- Omni-directional display: presents the same image aspect from all directions.

It seems likely that perspective displays of the third type will become more common in the future. Systems like the Sphere Theatre in Las Vegas are examples of this kind of technical perspective: a 3-D spherical panorama imaging/projecting (or mixed perspective) system that captures, represents and projects a partial or full 360-degree spherical panorama of a surrounding visual scene.

That concludes our review of camera and screen technology. Before we wrap up, a brief look at cinematography and cinematographic techniques is useful.

Cinematography

Cinematography is the art of motion picture (and more recently, electronic video camera) photography. The word cinematography comes from Ancient Greek κίνημα (kínēma) ‘movement’ and γράφειν (gráphein) ‘to write, draw, paint, etc.’.

Cinematography encompasses:

- Techniques: Use a variety of techniques to shape the audience’s visual experience, including camera angles, lighting, shot composition, focus, and special effects.

- Equipment: Use a variety of equipment, including cameras, lenses, filters, film stock, tripods, lighting, and memory cards.

- Storytelling and Creativity: Use a combination of narrative and image montage techniques, etc., to convey a feeling or transform a mood in a short scene.

Standard Cinema Techniques

The first film cameras were fastened to the head of a tripod, with only crude levelling devices provided, and thus were effectively fixed during the image-capturing or shot-taking process. Hence the first camera movements were the result of mounting a camera on a moving vehicle.

Then in 1897, Robert W. Paul constructed the first rotating camera head made for a tripod. This device had the camera mounted on a vertical axis that could be rotated by a worm gear driven by turning a crank handle. Shots taken using such a “panning” head were also referred to as “panoramas” in the film catalogues of the first decade of the cinema. This invention led to the creation of panoramic photographs as well.

Camera movement can enhance the visual quality, sense of spatial depth, and impact of a film. Some aspects of camera movement are:

- Zooming: Changing the focal length of the lens to make the subject appear closer or farther away. Creates a sense of intimacy or distance from the subject.

- Tilt: Rotating the camera vertically from a fixed position. Used to show the height of a subject or to emphasise a particular element in the scene.

- Panning: Rotating the camera horizontally from a fixed position. It can be used to follow a moving subject or to show a wide view of a scene.

- Pedestal/Booming/Jibbing: Moving a camera vertically in its entirety. Used to show vertical movement relative to a subject in a frame.

- Trucking: Moving a camera horizontally in its entirety. Used to show horizontal movement relative to a subject in a frame.

- Rolling: Rotating a camera in its entirety about its lens (optical) axis, so that the horizon tilts within the frame.
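The geometry behind several of these movements can be sketched in a few lines. The sketch below is illustrative only and rests on stated assumptions: a camera-centred coordinate system with x to the right, y up and z pointing forward along the lens axis; a full-frame sensor width of 36 mm as the default; and roll modelled as rotation about the lens axis. All function names are hypothetical. Zooming is represented by the standard thin-lens relation between focal length and horizontal field-of-view, while panning, tilting and rolling are rotations applied to a direction vector.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float = 36.0) -> float:
    """Zoom: horizontal field-of-view for a given focal length."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def pan(v: Vec3, angle_deg: float) -> Vec3:
    """Pan: rotate a direction about the vertical (y) axis; positive turns right."""
    x, y, z = v
    a = math.radians(angle_deg)
    return (x * math.cos(a) + z * math.sin(a), y, -x * math.sin(a) + z * math.cos(a))


def tilt(v: Vec3, angle_deg: float) -> Vec3:
    """Tilt: rotate a direction about the horizontal (x) axis; positive looks up."""
    x, y, z = v
    a = math.radians(angle_deg)
    return (x, y * math.cos(a) + z * math.sin(a), -y * math.sin(a) + z * math.cos(a))


def roll(v: Vec3, angle_deg: float) -> Vec3:
    """Roll: rotate a direction about the lens (z) axis."""
    x, y, z = v
    a = math.radians(angle_deg)
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a), z)


if __name__ == "__main__":
    forward: Vec3 = (0.0, 0.0, 1.0)
    print(horizontal_fov_deg(18.0), horizontal_fov_deg(50.0))  # wide vs. longer lens
    print(pan(forward, 90.0))    # camera now looks to the right
    print(tilt(forward, 90.0))   # camera now looks straight up
    print(roll(forward, 30.0))   # viewing direction unchanged by a roll
```

Note that in this model a roll leaves the viewing direction untouched and only rotates the frame about it, whereas pans and tilts change where the camera is looking. Pedestal and trucking moves would be translations of the camera position rather than rotations, and are omitted here for brevity.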

Advanced Cinematography

Director Steven Spielberg’s films are known for their innovative camera work and visual storytelling, and in particular for their use of lighting, camera angles, framing, and camera movement.

Some of Spielberg’s other techniques include:

- Fluid camera movement: Use of zoom shots and fluid camera movement to shift shot composition without cutting. This technique transports the viewer and gives the visuals energy.

- Camera movement in every direction: Moving the camera laterally on tracks, in and out on dollies, and up and down on cranes, all within a single shot.

- Dramatic and claustrophobic shots: Tight, dramatic framing that pushes the boundaries of classic cinematographic composition.

- Close-ups: convey actor emotions, etc.

- Tracking shots: The camera follows the actors as they move through the scene, capturing their performances from multiple angles.

- Dolly shots: Camera moves on a dolly track to follow the action within a scene.

- Motivated camera movement: The camera is part of the action, and its movement is informed by the motivations of the characters. For example, the camera might tilt downwards if a character is picking something up.

Steven Spielberg is also known for using an “L system” in his movies, which involves actors and the camera moving in an L-pattern. This can include actors turning in an L-pattern, or the camera moving in one direction while actors move in an L-pattern. Perhaps such techniques can give the viewer an improved, or more explicit, sense of space and spatial elements including scene structure and character location/movements/relationships, etc.

Conclusion

Our brief tour of camera perspective is complete. As we have seen, this category/form of perspective has a fascinating history; having played a key role in art, science, technology and culture over the last 100-120 years.

As mentioned, we have three basic ways of observing the physical world. First, looking-in/looking-at perspective, second, looking-out/around perspective, and finally, looking-through perspective. Whilst visual perspective (2nd type) provides humans with remarkably detailed, colourful, and efficient views of spatial reality, optical instruments can boost our natural capabilities in these respects. Optical cameras can enhance natural vision, by capturing more detailed, colourful, higher resolution and wide-field images, enabling flexible image capture/processing/display, rapid recording and transmitting of these images from/to remote places/times, etc.

More recently, perhaps the most innovative developments have occurred in Virtual, Augmented and Extended Reality and in Artificial Intelligence, where a blending/merging of the real with the digital has occurred. It is now often difficult to separate the true/optical image from the virtual image. In any case, such digital multiverse or metaverse trends are set to continue and/or evolve. Overall, we see a blending of optical perspective methods/systems with real-time computing, remote-sensing, Internet-of-Things, Virtual Reality, Artificial Intelligence, etc. Ergo, a new age of super-informative, all-encompassing, real/simulated, and integrated perspective views/images is gathering pace.

We humans potentially miss an infinity of sights related to an object’s or scene’s structure/composition, because forms are too small or large, too far, too dim, too fast, or hidden within opaque materials; and we are unaware of radiation beyond the visible spectrum. Today, precise modern cameras attached to microscopes, telescopes, satellites, drones, strobe-lights, radiation-detectors, and computers magnify our visual perception/conception of the world, which is undergoing a remarkable transformation as a result. Modern perspective methods/systems/instruments allow us to move far beyond the limitations of natural vision. On all scales from the submicroscopic to the cosmic, they expand our vision, revealing vanishingly faint images, invisible radiations, events imperceptibly swift or slow, remote realms of space, and landscapes/oceans which we cannot capture unaided.

In sum, modern camera perspective(s) provide an almost inconceivably powerful portal to marvellous and enlightening visual worlds! Perhaps without realising, and sooner than we imagine, we are set to explore new kinds of mind-blowing visual worlds together, as we experience the incredible images provided by a new generation of optical, digital/virtual and AI cameras.

-- < ACKNOWLEDGMENTS > --

AUTHORS (PAGE / SECTION)

Alan Stuart Radley, 16th May 2025 - author of main body of text on this page.

Kim Henry Veltman (1980 - 2017) - author of partial segments of text on this page.

---

BIBLIOGRAPHY

Radley, A.S. (2023) 'Perspective Category Theory'. Published on the Perspective Research Centre (PRC) website 2020 - 2025.

Radley, A.S. (2025) Perspective Monograph: 'The Art and Science of Optical Perspective', book series in preparation.

Radley, A.S. (2020-2025) 'The Dictionary of Perspective', book in preparation. The dictionary began as a card index system of perspective related definitions in the 1980s; before being transferred to a dBASE-3 database system on an IBM PC (1990s). Later the dictionary was made available on the web on the SUMS system (2002-2020). The current edition of the dictionary is a complete re-write of earlier editions, and is not sourced from the earlier (and now lost) editions.

Veltman, K.H. (1980) 'Ptolemy and the Origins of Linear Perspective' - Atti del convegno internazionale di studi: la prospettiva rinascimentale, Milan 1977, ed. Marisa Dalai-Emiliani (Florence: Centro Di, 1980), pp. 403-407.

Veltman, K.H. (1992) 'Perspective and the Scope of Optics' - unpublished.

Veltman, K.H. (2017) 'Perspective from Antiquity to the Present' - unpublished.

Veltman, K.H. (1994) 'The Sources of Perspective' - published as an online book (no images). Later published with images as 'The Encyclopaedia of Perspective' - Volumes 1, 2 - (2020) by Alan Stuart Radley at the Perspective Research Centre.

Veltman, K.H. (1994) 'The Literature of Perspective' - published as an online book (no images). Later published with images as 'The Encyclopaedia of Perspective' - Volumes 3, 4 - (2020) by Alan Stuart Radley at the Perspective Research Centre.

Veltman, K.H. (1980s-2020) 'The Bibliography of Perspective' - began as a card index system in the 1980s; before being transferred to a dBASE-3 database system on an IBM PC (1990s). Later the bibliography was made available on the web on the SUMS system (2002-2020). In 2020 the Bibliography of Perspective was published as part of 'The Encyclopaedia of Perspective' - Volumes 6, 7, 8 - by Alan Stuart Radley at the Perspective Research Centre.

---

Copyright © 2020-25 Alan Stuart Radley.

All rights are reserved.
