This section provides a detailed conceptual ontology or taxonomy for visual/optical/technical perspective.

Our goal is to simplify and clarify the subject of perspective, which is beset by numerous complex and often interrelated optical processes that hide its true nature. Despite progress, much remains to be done to establish perspective as a primary branch of knowledge, or a new field of study.

In particular, we must identify, gather and integrate a vast number of perspective facts, principles, types/forms, methods, systems, and phenomena. To that end, we are building an accurate taxonomy to catalogue all classes/branches of perspective, a monumental task that has not been previously performed.

Complexity of Subject Matter

Earlier we identified six basic kinds of visual/optical/technical perspective categories (systems/methods); namely natural (including environmental perspective and visual or retinal perspective), mathematical, graphical, instrument, simulated and new media categories.

Unfortunately, the complexity of visual/optical perspective (as a subject) increases significantly when we expand the developing taxonomy out from these basic categories; we have identified 1,200 types/forms of optical perspective, as detailed in our Dictionary of Perspective. This complexity increases further when we consider that the different types of perspective can combine and interrelate in various ways, each with a host of associated facets.

The question arises as to how it is possible to manage this complexity. Our approach has been to separate the subject of perspective into types, atomic facts, axioms, base principles, etc, and thus to identify and map the general categories, forms, processes, and phenomena of perspective and their interrelationships. Overall, we seek to develop a new perspective category theory that reflects real-world objects/events as closely as possible.

Perspective Types

To begin, we identify the basic types of optical perspective; where type can refer to perspective category or form (geometrical image form), depending upon context.

- Optical perspective <IMAGING + PROJECTING CLASSES> is concerned with recording <CAPTURING>, presenting <DISPLAYING>, and interpreting <DECODING> visual images of, or projecting <PROJECTING> images/beams into, a three-dimensional space (e.g. the physical world). It encompasses all forms of perspective that use, or purport to use, light, or electromagnetic radiation (ref. real, imaginary, or simulated light-rays, etc), to capture/project visual aspect(s) of a spatial scene; including all wavelength ranges: gamma-rays, x-rays, visual-spectrum, microwaves, radio, etc.

- Visual perspective <IMAGING CLASS> (of the first type, or not primarily related to the human visual system) is when a visual image is used to view, match, represent, create an illusion of, or an immersion into, the visual appearance of a spatial object/scene.

- Visual perspective <IMAGING CLASS> (of the second type, retinal perspective or the human visual system), sometimes called ‘true’ perspective, applies when a human views a spatial scene/object in the real world (unaided eyesight).

- Natural perspective: includes visual perspective (second type or retinal perspective) and environmental perspective or the optics of natural scenes or physical reality.

- Artificial perspective: any class of perspective involving instantiation of principles/methods or instruments/systems of human origin. The artificial perspective category includes mathematical, graphical, instrument, simulated and new media types.

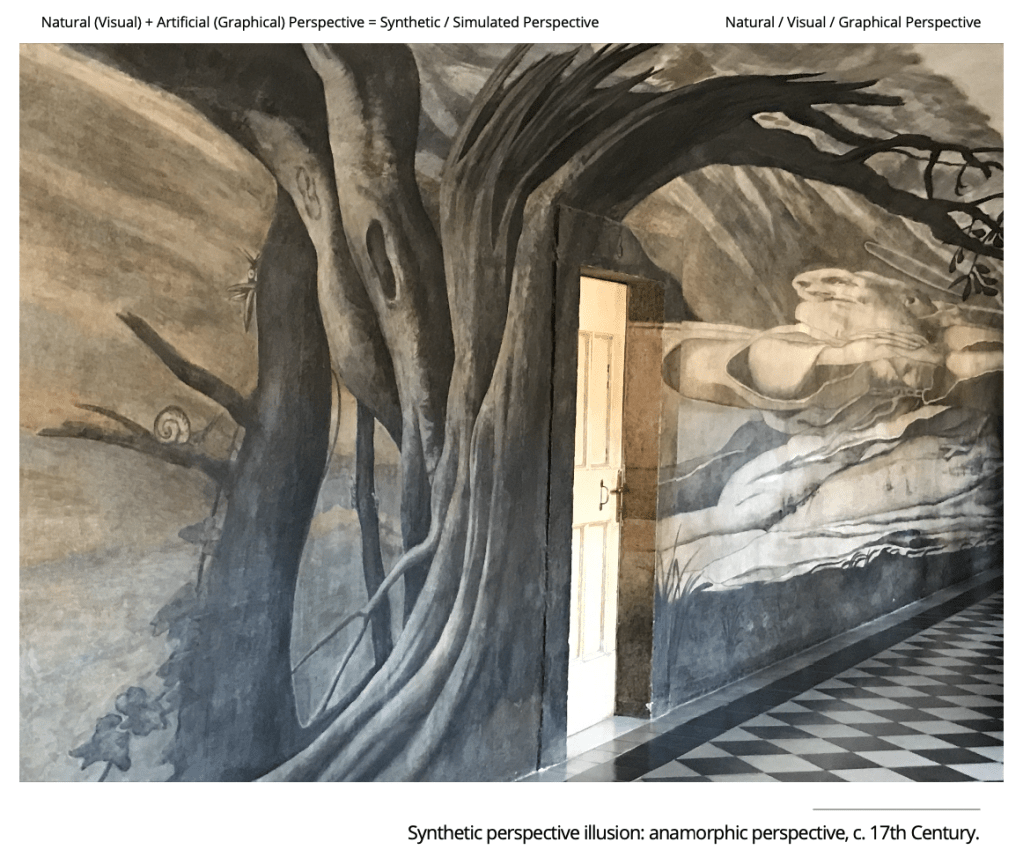

- Synthetic perspective: any systematic or accidental combination of natural and artificial perspective. According to a broad definition of synthetic perspective, many perspective categories/methods/images fall under this class; because capture and viewing of optical images (from physical reality) inherently involves the interaction of natural and artificial classes; for example when using human eyesight (natural perspective) to view photographic images (artificial perspective) of physical reality (environmental perspective). Normally all types of optical views/images fall under the synthetic moniker, including 1-D, 2-D, and 3-D optical image types. Ergo, included in this (very general) definition are perspective images of physical reality produced by any kind of perspective instrument or new media system, etc.

- Simulated or forced perspective: refers to designed <FALSE> illusive/immersive images/views of spatial reality, by visual illusion or optical adjustment of physical reality, or by representation of a false spatial reality (distorted/transposed scene geometry). Included in this definition are all perspective methods that produce the illusion of a ‘false’ 3-D space, including AR/VR, stereoscopic displays, forced, and accelerated perspective, etc.

- Accelerated perspective (simulated/forced type): a category of simulated and (possibly) synthetic perspective that employs the technique of forced perspective (false perspective) to increase the perspective recession, or increase the converging angle of lines (converging appearance of a set of object space parallel lines) directed towards vanishing points, and so to increase the apparent depth.

- Decelerated perspective (simulated/forced type): a category of simulated and (possibly) synthetic perspective that employs the technique of forced perspective (false perspective) to decrease apparent perspective recession, or decrease the angle of vanishing lines directed towards vanishing points, and thus to decrease apparent depth of the optical scene.

- Imaging or a captured perspective: refers to the formation of an image or representation ‘backwards’ from a spatial reality (ref. visual perspective (retinal) or camera perspective, etc).

- Projected perspective Image/beam: refers to sending images ‘forwards’ into a physical reality, or projecting light-pencils/shadows onto physical objects.

- Mixed perspective: refers to the combination of imaging and projection perspective; for example cinema perspective and/or Virtual Reality, Augmented Reality and Extended Reality perspective types.

- Composite perspective, or category chaining perspective: refers to a perspective system comprised of more than one category or system of perspective.

- Real perspective image/view: refers to a perspective image formed directly from optical imaging of a spatial scene/object present in physical reality: <OPTICAL IMAGING PROCESS>.

- Represented image/view: refers to a perspective image NOT formed directly from optical imaging of a spatial scene/object present in physical reality, and such an image may be (for example) a sketched or graphical copy made by human hand: <MATHEMATICAL MODELLING>, <GRAPHICAL MODELLING>, <COMPUTER MODELLING>, <OPTICAL MODELLING>.

- Combined perspective image/view: refers to any combination of a real and represented perspective image(s)/view(s) [image contains both types of image/view overlaid (one on top of the other) or arranged side-by-side].

- Ordinary or single scene perspective <SCENE TYPE A> refers to any perspective image of a homogenous object space (single scale space).

- Blended or multi-scene/double-scene perspective <SCENE TYPE B> refers to any perspective image of a combined object space, with multiple spatial geometries or scale-spaces.

- Solitary perspective refers to a single perspective image of a single spatial scene/object (directly along line-of-sight).

- Manifold perspective or image-overlay perspective refers to multiple perspective images of multiple spatial scenes/objects (directly along line-of-sight), for example looking through a window at both your own reflection and simultaneously what lies behind the windowpane.

- Simple perspective refers to a perspective image of an object/scene that is symmetrical about its centre line.

- Complex perspective refers to a perspective image of an object/scene that is NOT symmetrical about its centre line.

- Regular perspective: refers to a perspective image of an object/scene that involves regular shapes/Forms, typically comprised of straight lines, regularly curved lines, flat planes, squares, circles, rectangles, regular solids, etc.

- Irregular perspective: refers to a perspective image of an object/scene that involves highly irregular or complex / random shapes/Forms, the same typically without identifiable order and with no straight lines, regularly curved lines, planes, squares, circles, rectangles, regular solids, etc.

We have only listed a small sample of the 1,200 types of perspective identified in the Dictionary of Perspective. Many other types of perspective are known including several multi-view perspective categories, different kinds of projection categories, mirror and reflection perspective types, lighting and shadow perspectives, motion/cinema perspectives, transparent perspective types, perspective phenomena, optical assembly classes, observation modes, camera shot types, image linkages, etc.

Once again, it is important to remember that our analysis of the field of visual/optical/technical perspective is at an early stage of development; accordingly, the taxonomy shown is subject to change as the theory develops. In particular, a key requirement is to formalise our taxonomy using standard ontological concepts (e.g. class, instance, process, inheritance and relationship types, etc). Remember that whilst the ontology, taxonomy, and associated theory appear quite complex, they can clarify the nature of perspective events/processes that are operating, or will operate, in a practical situation. Furthermore, they can help characterise the imaging phenomena, etc, that relate to said imaging scenario.
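As a rough illustration of what such a formalisation might look like, the sketch below encodes a small part of the taxonomy as a class hierarchy in Python. The `Category` class, its methods, and the choice of "optical perspective" as the root node are assumptions made purely for illustration; only the category names themselves come from the definitions of natural and artificial perspective given above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Category:
    """A class in the taxonomy; `parent` models inheritance (an is-a link)."""
    name: str
    parent: Optional["Category"] = field(default=None, repr=False, compare=False)
    children: List["Category"] = field(default_factory=list, repr=False, compare=False)

    def add(self, name: str) -> "Category":
        """Create a sub-category of this one and return it."""
        child = Category(name, parent=self)
        self.children.append(child)
        return child

    def lineage(self) -> List[str]:
        """Names from this category up to the root."""
        node, names = self, []
        while node is not None:
            names.append(node.name)
            node = node.parent
        return names

    def find(self, name: str) -> Optional["Category"]:
        """Depth-first search for a category by name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None


# Hypothetical root; the sub-categories follow the definitions given earlier.
root = Category("optical perspective")
natural = root.add("natural")
for sub in ("environmental", "visual (retinal)"):
    natural.add(sub)
artificial = root.add("artificial")
for sub in ("mathematical", "graphical", "instrument", "simulated", "new media"):
    artificial.add(sub)

print(" < ".join(root.find("graphical").lineage()))
```

A tree is the simplest possible choice here. Since types such as synthetic or mixed perspective combine several categories, a fuller formalisation would probably need multiple parents or explicit relationship types rather than a single `parent` link.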

In any case, Figure 1 shows a top-level ontology for optical/technical perspective, whereby most of the types shown are explained throughout the current website and related publications.

And that’s about it for our summary of the basic categories of optical perspective; which are identified as top-level processes that give rise to perspective images and associated perspective phenomena.

System Categories and Image Forms

If we count the perspective categories identified above, then we have about 30 distinct categories. But earlier, we claimed to know about 1,200 types as identified in our Dictionary of Perspective! So where are these other kinds of perspective and how can we find or classify them using our developing category theory?

First, remember that we separated perspective into:

- Perspective System Categories: perspective systems (optical processes)

- Perspective Image Forms: geometrical, optical, media image forms (perspective image outcomes)

So the upshot is that we can have many perspective sub-categories that fall under the basic category headings already identified, plus we can have many different perspective image forms that fall under the three types: geometrical image form, optical image form, and media image form (container media). Notably, we can have different combinations of these various categories/forms, giving rise to new category/form types.

Do not worry if this explanation has not clarified matters yet; hopefully, all will become clear as our explanation develops over the coming pages/sections!

We start by considering the nature of a natural perspective process, whereby we use a perspective system to capture an image that is projected backwards from spatial reality, a procedure that takes place by means of the optical image chain.

Optical Image Chain

The optical imaging chain is a series of things/processes involved in image formation, capture, display, processing, analysis and storage/transmission.

The elements of the optical imaging chain include:

- Object: The object being imaged (in object space)

- Imager: The device that images (or purports to image) the object, such as an eye/camera

- Sensor: The device that converts the energy into an image (often included in imager conception)

- Image: The perspective image (in image space)

- Processor: A device that processes the image data

- Display: A device that displays the image

- Analysis: A process of analysing the image

- Storage/transmission: A process of storing or transmitting the image
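The elements listed above can be sketched as an ordered enumeration. This is only a minimal sketch: it assumes that the listed order is the order in which an image passes along the chain, which will not hold for every system, and the names `ChainElement` and `order_chain` are hypothetical.

```python
from enum import IntEnum


class ChainElement(IntEnum):
    """Elements of the optical imaging chain, numbered in the listed order."""
    OBJECT = 1
    IMAGER = 2
    SENSOR = 3
    IMAGE = 4
    PROCESSOR = 5
    DISPLAY = 6
    ANALYSIS = 7
    STORAGE_TRANSMISSION = 8


def order_chain(elements):
    """Return the elements present in a scenario, sorted into chain order."""
    return sorted(set(elements))


# A scenario in which an object is imaged and the resulting image is displayed.
scenario = order_chain([
    ChainElement.DISPLAY,
    ChainElement.OBJECT,
    ChainElement.IMAGE,
    ChainElement.IMAGER,
])
print(" -> ".join(e.name.lower() for e in scenario))
```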

At this point it is useful to introduce a new term related to perspective image production; being the directed space containing the object of attention of a perspective optical imaging system, and named the target space.

Target Space

Since a perspective view/image is normally the product of several identified kinds of perspective system categories, natural and artificial, being fundamentally a synthetic process, we can develop a general method to unpack how a perspective image is formed and precisely what it consists of.

At this stage, we introduce the notion of a target space: the original object space that the perspective system as a whole is directed towards, and of which the perspective images in the final image space (at the end of the optical image chain) are a direct or indirect representation. Let us now consider some examples to understand the concept of target space. It is important to recognise that only in rare circumstances can a perspective system form a perspective image alone, that is, without engaging with at least one other category!

Firstly, when looking at physical reality using your eyes; then the target space is natural/environmental/physical reality and is a particular region of 3-D physical space. In this case the image space is retinal or visual space. Now if you use a camera to capture a 2-D photograph (image space) of that same region then the target space is still natural/environmental/physical, as when you take a 2-D movie or film of the region and display it for viewing on a 2-D television screen. However, in the latter cases, the image space is instrument or photographic/televisual space, at least before a human looks at the photograph/television to transpose said image into visual space.

In both cases, the 3-D target space is a region of 3-D physical space, and is natural or contained inside, or consists of, physical reality; this is the object space that contains the spatial objects/scene under investigation. We may have intermediate representations of this target space; they are mere representations, but they do form part of the optical image chain. For example, a camera generates a particular kind of 2-D instrument or image space (a kind of secondary geometry) that is directed towards the same 3-D physical target space in a specific optical projection sense, but with only a partially correct translation due to image distortion and perspective phenomena effects in mapping from 3-D to 2-D space.

If we consider a painting (2-D image space) of the same region of physical space, then the target space is still the 3-D region of physical/natural space. However, if the painting is of a wholly imaginary or fictional scene, then the target space is a 3-D imaginary space, and possibly has real and/or imaginary properties accordingly.

What about mixed perspective, for example when we have a 2-D cartoon movie displayed on a cinema screen? Well in that case we can say that the final object, or end of the perspective image chain, is a target space that is a 2-D imaginary space that is artificial. However note that the cinema viewer looks through a kind of intermediate target space (instrument projection space), being the real physical object/cinema space right up to the screen, which is itself a natural perspective object space, and refers to the enveloping target space of the human visual system (visual perspective), through and into which, the layered spatial scene(s)/object(s) is/are observed.

Put simply, the purpose of the concept of target space (and the associated optical image chain) is to facilitate identification, registration, and mapping, of the journey of the image from object to image space. In other words, to be able to understand how any particular perspective image has been formed, we must be able to unpack all of the perspective ‘transformation’ processes involved, which includes identification and modelling of intermediate perspective spaces and also corresponding intermediate perspective categories/methods/principles!
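To make this unpacking a little more concrete, here is a minimal sketch that records the journey of an image as an ordered list of spaces and splits it into target space, intermediate spaces, and final image space. The `Space` class, the `unpack` function, and the category labels are hypothetical; the example chain follows the photograph scenario described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Space:
    name: str      # e.g. "3-D physical space"
    category: str  # perspective category associated with this space


def unpack(chain: List[Space]) -> dict:
    """Split an ordered chain of spaces into target, intermediate and final image space."""
    if len(chain) < 2:
        raise ValueError("need at least a target space and an image space")
    target, *intermediate, final = chain
    categories = []
    for space in chain:
        if space.category not in categories:
            categories.append(space.category)
    return {
        "target": target.name,
        "intermediate": [s.name for s in intermediate],
        "final_image_space": final.name,
        "categories": categories,
        # True only when a single perspective category is involved.
        "single_perspective": len(categories) == 1,
    }


# A human viewing a photograph of a region of physical reality.
photo_viewing = [
    Space("3-D physical space", "natural/environmental"),
    Space("2-D photographic space", "instrument"),
    Space("visual (retinal) space", "visual"),
]
result = unpack(photo_viewing)
for key, value in result.items():
    print(f"{key}: {value}")
```

In this sketch the photographic space shows up as an intermediate space, and three categories are involved, which matches the point that a perspective image is rarely formed by one category alone.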

Armed with the new concept(s) of target and intermediate perspective spaces and processes; we are now able to analyse the aforementioned perspective sub-categories and sub-forms, plus associated sources, properties, outcomes, etc. In any case, we have many new kinds of perspective categories/forms that are possible, for example, 1-D, 2-D, 3-D object dimensions, with associated 1-D, 2-D and 3-D image space dimensions, etc.

But first, it is useful to consider how and why different kinds of linear perspective arise and become possible, because this helps us to clarify how the concepts of perspective category and image form can have physical, virtual or media embodiments.

Kinds of Linear Perspective

Linear perspective provides a linear structure for the depiction on a surface of the apparent shape, size, and relative position of the objects constituting a spatial scene in three dimensions; that is for the representation of form (often on a 2-D surface) or what is sometimes called the representation of space.

On reflection, and on the back of these arguments, we can state there are (at least) 4 kinds of linear perspective:

- Graphical/Artificial Linear Perspective Representation (old media embodiment): when we construct a drawing of a spatial object/scene according to 1-2-3 point perspective.

- Visual/Natural/Optical Linear Perspective Representation (optical media embodiment): when we look at or capture with an optical instrument, a physical object/scene with a particular type of object space framework such as a metric grid, which is then projected according to the appearance rules of 1-2-3 point perspective (for example).

- Virtual Constructed Linear Perspective (new media embodiment): when we use a special kind of optical system, to project a spatial scene/object according to the appearance rules of 1-2-3 point perspective (Virtual / Extended Reality for example).

- Physical Constructed Linear Perspective (physical media embodiment): when we use a special kind of simulated, forced perspective to change the physical environment according to the appearance rules of 1-2-3 point perspective (for example construction of accelerated perspective to enhance the rate of recession of the appearance of parallel lines).
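The appearance rules mentioned in each of these cases can be illustrated with a simple central projection. The sketch below assumes an idealised pinhole model with the image plane at distance `focal_length` in front of the station point, which is a simplification not stated in the text; the function names and the rail example are hypothetical. It shows two parallel lines running away from the viewer whose images converge towards a single vanishing point.

```python
def project(point, focal_length=1.0):
    """Central (pinhole) projection of a 3-D point (x, y, z) onto the plane z = focal_length."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the station point (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


def rail(x_offset, depths, height=-1.0):
    """Points along a line parallel to the viewing axis, one unit below eye level by default."""
    return [(x_offset, height, z) for z in depths]


depths = [2.0, 4.0, 8.0, 16.0, 1000.0]
left = [project(p) for p in rail(-1.0, depths)]
right = [project(p) for p in rail(+1.0, depths)]

# Horizontal gap between the two projected lines at each depth.
gaps = [r[0] - l[0] for l, r in zip(left, right)]
for z, gap in zip(depths, gaps):
    print(f"depth {z:7.1f}: projected gap {gap:.4f}")
```

The gap shrinks in proportion to 1/z, so both projected lines approach the image centre as depth increases. That centre is the vanishing point for lines parallel to the viewing axis, which is the one-point case of 1-2-3 point perspective.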

At the beginning of our exposition, we defined perspective as the formation of an image or view of a spatial reality; the same being the so-called third dimension or 3-D, which refers to the third spatial dimension (depth) as explained below.

Nature and Meaning of 3-D

So far we have only considered perspective categories with a 2-D image plane or 2-D sensors, but there are several ways to capture and project images of spatial reality. As explained, so-called 3-D refers to the third spatial dimension (depth), but can have subtly different meanings as explained below.

In terms of natural perspective (physical reality), there are two basic kinds of 3-D:

- 3-D object space (space itself or structures contained therein). A 1-D/2-D/3-D structure in a 3-D object space (spatial scene/object).

- Viewing a 3-D or spatial scene using eyes (often using binocular vision). A view of a spatial scene/object using eyes [visual perspective (2nd type)], or looking at physical space with an optical instrument (amplified vision).

In artificial perspective <IMAGING CLASS>, there are five kinds of 3-D representation:

- Uni-angular, monocular representation of spatial scene/object. A 1-D/2-D/3-D image/view/measurement/calculation of a spatial scene/object captured from a single viewing angle. Viewing a 2-D linear perspective image of spatial scene/object is one example, captured from a fixed viewing angle.

- Uni-angular, binocular representation of spatial scene/object. A 1-D/2-D/3-D image/view/measurement/calculation of a spatial scene/object captured from a fixed central viewing angle, but using a binocular method (twin apertures looking at very slightly different viewing angles). Viewing a stereoscopic image of spatial scene/object is one example, employing monocular perspective depth cues, and certain binocular depth cues.

- Multi-angular, monocular or binocular representation of spatial scene/object. A 1-D/2-D/3-D image/view/measurement/calculation of a spatial scene/object captured from multiple viewing angles, using either a monocular or binocular method. Viewing a hologram image is an example of multi-angular binocular 3-D, employing depth cues such as monocular perspective, focussing plus (partial) natural scaling and variable resolution effects, binocular vergence and parallax, plus 3-D shape changes due to multiple viewing angles, etc.

- Unlimited-angle representation of spatial scene/object (monocular or binocular). A 1-D/2-D/3-D image/view/measurement/calculation/model of a spatial scene/object captured/modelled from (ostensibly) every viewing angle, using either a monocular or binocular method. Viewing/exploring a digital model (computer modelling), or a Virtual Reality world, is an example of either unlimited viewing-angle monocular or binocular 3-D. With binocular 3-D, the depth cues employed can include monocular perspective (looking out/around plus looking in/at), possibly focussing, (fully) natural scaling and variable resolution effects (zooming perspective), binocular vergence and parallax, plus 3-D shape changes due to multiple viewing angles, etc.

- Omnidirectional representation of 2-D scene/object (monocular) or 3-D scene/object (binocular). For example, a type of 3-D perspective that enables a flat 2-D image to project the same aspect or geometry from omnidirectional viewpoints. See Andotrope, Zoetrope, and 3-D/2-D Perspective.

Patently the kind of 3-D captured, and projected/displayed, depends primarily on the detector or camera type, plus sometimes on the display screen employed as well.
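The binocular parallax cue used by the twin-aperture methods above can be sketched numerically. The example assumes two idealised pinhole apertures with parallel viewing axes, separated horizontally by a `baseline`; this model, the function names, and the 0.065 m baseline (roughly a human eye separation) are illustrative assumptions rather than part of the taxonomy.

```python
def image_x(point_x, depth, aperture_x, focal_length=1.0):
    """Horizontal image coordinate of a point for a pinhole aperture at x = aperture_x looking along +z."""
    if depth <= 0:
        raise ValueError("point must lie in front of the apertures (depth > 0)")
    return focal_length * (point_x - aperture_x) / depth


def disparity(point_x, depth, baseline=0.065, focal_length=1.0):
    """Difference between left and right image coordinates of the same point."""
    left = image_x(point_x, depth, -baseline / 2, focal_length)
    right = image_x(point_x, depth, +baseline / 2, focal_length)
    return left - right


for depth in (0.5, 1.0, 2.0, 4.0, 8.0):
    print(f"depth {depth:4.1f} m: disparity {disparity(0.3, depth):.5f}")
```

Under these assumptions the disparity equals `focal_length * baseline / depth`, so it halves each time the depth doubles and does not depend on the sideways position of the point. This is why binocular parallax is a strong depth cue for near objects and a weak one for distant objects.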

Camera Assembly / Type

Optical assembly refers to the interrelation of a specific perspective method/system and associated scene/object structures including object space frameworks, plus camera design/form. As discussed, we have different kinds of cameras, including monocular, binocular, and multi-ocular cameras, depending upon the number and types of apertures employed. Of course, for multi-view perspectives, it may be that images from multiple cameras are combined into a single image space by one method or another, but as yet such systems are rare.

For monocular perspective imaging we have three basic camera types:

- Flat or 2-D Image Plane: painting, photograph, movie

- Cylindrical Image Plane: Cinerama, Circle-Vision

- Spherical Image Plane: Sphere Theatre Las Vegas.
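The difference between these image surfaces can be sketched by projecting the same object point onto each of them. The formulas below are the usual textbook central projections onto a plane, a vertical cylinder, and a sphere, all centred on the station point; they are an assumption about how such cameras might be modelled, and the function names are hypothetical.

```python
import math


def to_flat(point, f=1.0):
    """Project (X, Y, Z) onto a flat image plane at distance f; only points with Z > 0 are visible."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("a flat image plane cannot record points behind the station point")
    return (f * X / Z, f * Y / Z)


def to_cylinder(point, f=1.0):
    """Project onto a vertical cylinder of radius f: (arc length around the cylinder, height on it)."""
    X, Y, Z = point
    r = math.hypot(X, Z)
    if r == 0:
        raise ValueError("point lies on the cylinder axis")
    return (f * math.atan2(X, Z), f * Y / r)


def to_sphere(point, f=1.0):
    """Project onto a sphere of radius f: (arc length in azimuth, arc length in elevation)."""
    X, Y, Z = point
    r = math.hypot(X, Z)
    if r == 0 and Y == 0:
        raise ValueError("point coincides with the station point")
    return (f * math.atan2(X, Z), f * math.atan2(Y, r))


p = (1.0, 0.5, 1.0)
print("flat:       ", to_flat(p))
print("cylindrical:", to_cylinder(p))
print("spherical:  ", to_sphere(p))
```

One consequence visible in the sketch is field of view: the flat projection rejects any point with `Z <= 0`, whereas the cylindrical and spherical projections accept points all the way around the station point.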

Earlier, we explained how the captured images are related to perspective image forms, and also relevant image display/projection systems.

Observation Mode

Now we come to the issue of observation mode, or camera observation scenario, which relates to three observation scenarios:

- Sphere or Cylinder of Revolution Perspective: multiple station-points, single camera focus point.

- Sphere or Cylinder of Vision Perspective: single station point, multiple camera focal points.

- Arbitrary Camera Setup(s): single/roaming station point, single/roaming camera focal point.

Patently, captured image form will depend upon the camera type. However, it is important to realise that it is sometimes possible (for example) to capture ordinary 2-D images from multiple viewpoints and then have these same images transformed into a single image space that represents a spherical observation scenario as detailed above. This procedure can sometimes be performed using special mathematical and/or digital techniques, etc.

We shall now examine the design of 3-D displays.

3-D Display – Design

A surface 3-D display device, or other type of optical/volume 3-D display, may be capable of conveying depth to the viewer by application of one or more depth cues, often using film or digital media based images.

There are at least five basic kinds of 3-D surface displays used for binocular vision:

- Stereoscopic surface display (uni-angular, multi-angular, VR/AR [plus BOOM]): Stereoscopic displays produce a 3-D effect using stereopsis, but can cause eye strain and visual fatigue. Stereoscopic 3-D displays are common in VR / AR. Also a BOOM AR overlays a digital universe onto the physical universe. Holograms are intrinsically stereoscopic (no eye-strain).

- Light field 3-D surface display (mostly uni-angular type): A light field display produces a realistic 3-D effect by combining uni-angular stereopsis and accurate focussing depth cues for the displayed content.

- Lenticular auto-stereoscopic 3-D surface display (uni-angular parallel type): A lenticular 3-D display produces a parallax type of 3-D stereoscopic image/view.

- Holographic 3-D surface display (multi-angular type): A holographic display produces a more realistic 3-D effect using interactive holograms (holographic images of motion type), and by combining multi-angular stereopsis and accurate focussing depth cues, moving station-point, variable resolution effects (zooming), binocular vergence and parallax, plus 3-D shape changes due to multiple viewing angles for the displayed content. At the time of writing, no such holographic displays have become available for widespread use (not including mirror images).

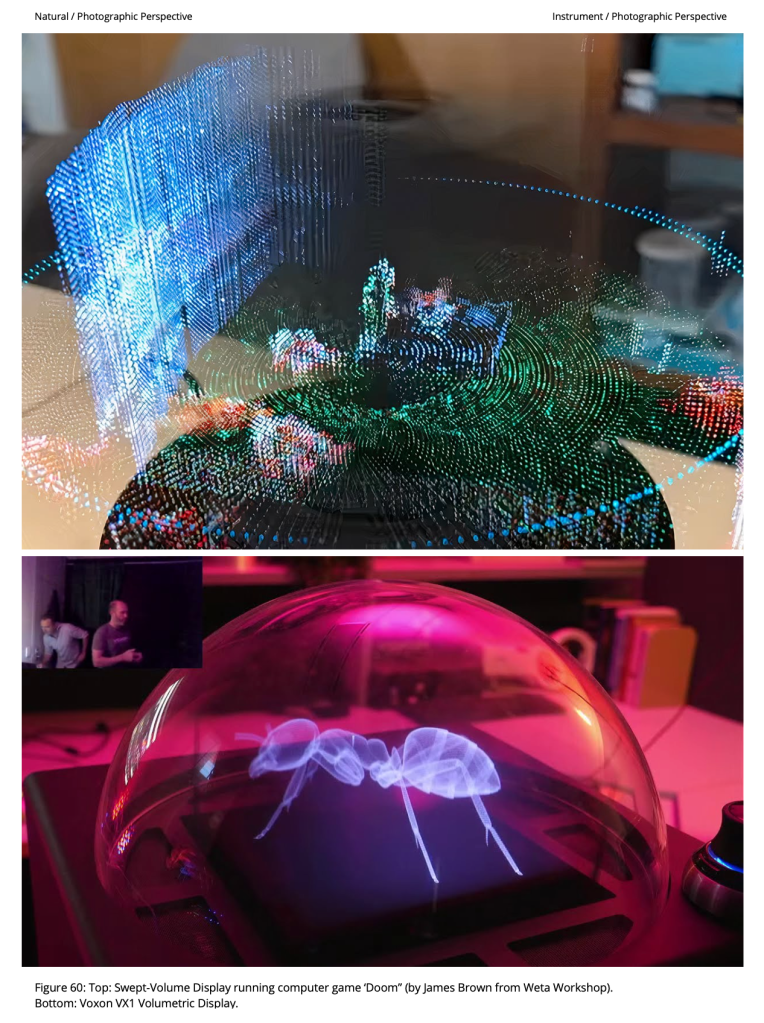

- Swept Plane 3-D Display: a structure from motion technique creating the optical illusion of a volume of light, due to the persistence of vision property of human visual perception. The principle is to have a 2-D lighted surface sweep in a circle, creating a volume. The image on the 2-D surface changes as the surface rotates. The lighted surface needs to be semi-translucent.

- Virtual 3-D Display (projected field-of-view): generation of 3-D display using VR headset.

We can also classify surface displays in terms of distance from the observer’s eye:

- Distance of 3-D display: A: Near-eye, B: Distant as in TV or theatre screen: 3-D displays can be: near-eye displays as in VR headsets, or they can be further away from the eyes like a 3-D-enabled mobile device or 3-D TV, or a 3-D movie theatre.

We can also classify the displays in terms of display/image form:

- Physical screen 3-D display: C: Flat, D: Volumetric (curved / spherical): Notably, the term “3-D display” can also be used to refer to a volumetric display which may generate content that can be viewed from multiple angles (volume screens, etc).

- Real-space image 3-D display: E: Hologram, F: Reflection hologram, G: Other

Still other kinds of displays are possible, for example, projection onto retina, and andotrope, etc. See: 3-D, 3-D perspective (1, 2, 3, 4), volume display, andotrope, fan-hologram, BOOM, visual perspective.

3-D Display – Views

For an artificial perspective image display system, there are four kinds of 3-D view:

- Uni-angular view of uni-angular image of spatial scene/object: Ordinary 2-D perspective image displayed on a surface display or computer monitor.

- Multi-angular view of uni-angular image of spatial scene/object: The term “3-D” is also used for a volumetric display which displays uni-angular images observed from multiple angles (onlooker changes the direction of gaze), whereupon the images are notionally viewed in 3-D, but without experiencing image-based angular perspective changes. Only (uni-angular) perspective cues are available. Example: flat or 2-D images viewed/present in a 3-D space.

- Multi-angular view of multi-angular image of spatial scene/object: The term “3-D” is also used for a volumetric display which generates content that can be viewed from multiple angles, the same being multi-angular images captured/generated by/for viewing from multiple viewing angles, whereupon the onlooker (may) experience multi-angular perspective depth cues (on directional screen canvas). Example: spatial or 3-D images/views that are viewed/present in a 3-D space and that are presented/viewed from multiple or changing viewpoints (or viewing angles).

- Omnidirectional representation of 2-D scene/object (monocular): A type of 3-D perspective that enables a flat 2-D image to project the same aspect or geometry from omnidirectional viewpoints. See Andotrope, Zoetrope, and 3-D/2-D Perspective.

In any case, we have come to the end of our brief analysis of 3-D, 3-D capture methods, 3-D displays, etc. Evidently, this is a complex, highly technical, and fast-developing topic having perspective principles/methods/theory firmly rooted in all innovative developments.

Once again we can return to perspective types or categories/forms to understand how 3-D perspective views/images can be captured/produced and presented/displayed in any way whatsoever.

Single, Composite and Mixed Perspective

Single perspective is when only one category of perspective is involved in forming a perspective image/view. But this is a rare eventuality, since normally we have at least two categories involved, as when we use visual perspective (or retinal perspective) to view a natural environmental perspective scene. However arguably, if we ignore the target environmental perspective space, then we could say that such a situation is an example of single perspective.

Perspective is a set of image transformation processes, whereby the perspective image chain consists of either a single category of perspective system, or many, as in composite perspective, when more than one (artificial/synthetic) category is involved simultaneously to produce a perspective view/image.

Visual/optical/technical perspective can be separated into two classes: firstly the imaging perspective class: capturing/forming an image/view, measurement, representation, or illusion, of a spatial reality (3-D); and secondly, the projecting class: projecting visual images, light-beams/pencils and/or object shadows into physical reality.

However, we can have a third class, mixed perspective that refers to a combination of imaging and projecting classes; as can happen with cinema projectors, mirrors, virtual reality, projection mapping, drone and light imaging displays, etc.

Ordinary and Blended (Double) Perspective

Object space refers to the target space of a perspective system, through and into which, the spatial scene/object(s) is/are observed. In classical and ordinary perspective, with optical situations operating in the local environment (ref. sans gravitational and relativistic effects that bend space or light ray paths), the object space is Euclidean, infinite, isotropic, three-dimensional, homogeneous, and kinaesthetic. Object space is identical to so-called metric, isotropic, and/or surveyor’s space, and consists of 1-D, 2-D, or 3-D object/scene structures in a 3-D spatial reality.

We have two types of spatial class; ordinary and blended perspective.

Ordinary scene perspective is an image/view of a spatial scene that comprises a single scene with (optically and truly) a homogeneous geometry; that is, a spatial scene that is not composite (i.e. not synthetic), being geometrically unified (from all viewpoints) and consisting of a single-scale space. This is the normal type of perspective that we experience when looking at the natural environment or looking at most photographs, paintings, movies, etc.

Blended scene perspective, or double/multi-scene perspective, is an image/view of a spatial scene that comprises two or more separate (and differently scaled/structured) spatial geometries; whereby the resultant scene appears to (falsely) consist of a homogeneous spatial geometry (single-scale space); however, in reality, two or more geometries have been combined (often seen as such from only a single viewpoint) to appear as a fully united and integrated spatial geometry.
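A small numerical sketch may help show why a blended scene often holds together from only one viewpoint. It uses the standard formula for the visual angle subtended by an object of a given height at a given distance; the specific heights, distances, and function names are made-up illustrations.

```python
import math


def angular_size(height, distance):
    """Visual angle (radians) subtended by an object of the given height at the given distance."""
    if distance <= 0:
        raise ValueError("distance must be positive")
    return 2 * math.atan(height / (2 * distance))


def appears_same_scale(a, b, viewer_shift=0.0):
    """Compare two (height, distance) objects after the viewer moves viewer_shift closer to both."""
    (h1, d1), (h2, d2) = a, b
    return math.isclose(
        angular_size(h1, d1 - viewer_shift),
        angular_size(h2, d2 - viewer_shift),
    )


miniature = (0.5, 2.0)    # a 0.5 m model placed 2 m from the viewer
full_size = (5.0, 20.0)   # a 5 m structure placed 20 m from the viewer

print("intended viewpoint:", appears_same_scale(miniature, full_size))
print("viewer 1 m closer: ", appears_same_scale(miniature, full_size, viewer_shift=1.0))
```

From the intended viewpoint both objects subtend the same visual angle, so the two differently scaled geometries can read as a single-scale space. Once the viewer moves, the angles diverge and the blend is exposed.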

Another type is blended image perspective, or multi-view perspective, is defined as several images/views of a spatial scene blended or merged into a single image-space.

Regular and Irregular Perspective

A perspective framework refers to an object space containing regular physical or geometric structures and/or metric-grids, sets of parallel and orthogonal lines, etc. Said structures, when observed/imaged, are named regular perspective (regular shapes), as opposed to irregular perspective or irregularly shaped object forms.

Useful understanding or decoding of a perspective image involves correctly interpreting the shape/size distortions of the projected image. This is where a method such as linear perspective comes into its own, because we can (often) employ known perspective framework structures, including known ground-plane geometry, an upright picture-plane, plus parallel lines and/or a metric grid, etc. Such structures enable humans to accurately decode the perspective image in geometric terms. Not every object space contains such structures, but many artistic/photographic linear perspective images reflect spaces that do contain (at least partial) framework structures.
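As a minimal sketch of why parallel framework lines help us decode a linear perspective image, the following Python example projects two parallel ground-plane lines through a simple central (pinhole) projection and shows their images closing in on a common vanishing point. The function names, the `focal_length` parameter, and the choice of coordinate axes are our own illustrative assumptions, not part of any particular perspective system described here.

```python
def project(point, focal_length=1.0):
    """Central projection of a 3-D point (x, y, z) onto the picture plane z = focal_length.

    The station point (eye) is assumed to sit at the origin, looking along +z.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the station point (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


def vanishing_point(direction, focal_length=1.0):
    """Image point approached by every line with this 3-D direction (requires dz != 0)."""
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("lines parallel to the picture plane have no finite vanishing point")
    return (focal_length * dx / dz, focal_length * dy / dz)


# Two parallel rails on a ground plane (y = -1), receding along the z axis.
depths = (1.0, 10.0, 1000.0)
left_rail = [(-1.0, -1.0, z) for z in depths]
right_rail = [(1.0, -1.0, z) for z in depths]

left_image = [project(p) for p in left_rail]
right_image = [project(p) for p in right_rail]
vp = vanishing_point((0.0, 0.0, 1.0))

for l, r in zip(left_image, right_image):
    print(l, r)
print("vanishing point:", vp)
```

The two rails stay two units apart in object space, yet their image points draw together as depth increases. A viewer who knows the rails are parallel can use that convergence to read depth from the flat image.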

Real, Represented and Combined Perspective

Image space refers to an apparent visual/optical space formed/generated by a perspective system of one kind or another. In a classical perspective image (ref. a spatial recession form such as linear perspective) said viewed/represented space is non-Euclidean, anisotropic, inhomogeneous, and three-dimensional (ostensibly). Still, sometimes it can be a true 3-D space, as with stereoscopic/immersive methods such as a virtual reality system, etc.

However, often said image is formed onto a planar or flat picture plane and is, therefore, in reality, a two-dimensional image space that forms an apparent or false 3-D imaginary/illusive space. In the case of monocular visual perspective (2nd type), the perspective image is formed onto a spherical retina that is largely a two-dimensional recording surface. Visual or retinal perspective is, therefore, ostensibly a two-dimensional image space that again forms a type of false 3-D apparent/imaginary or illusive space.

A combined perspective (multi-view type) is any view/image that contains both real and represented views which are overlaid but visually separated. An example might be looking through a gun sight, whereby you see a real perspective view of the outside world and, simultaneously superimposed upon it, a represented sighting and/or optical scaling overlay that works in combination with that view.

Another type of combined perspective (multi-scene type) is a class of synthetic and blended scene perspective, or an image/view that combines natural and artificially modified spatial scene element(s). This is any perspective image formed of a synthetically unified spatial scene, being a spatial scene that is composite or optically unified (typically seen from a narrow range of viewpoints) and thus only apparently consisting of a single-scale space. It requires the use of simulated/forced/accelerated perspective methods, and similar illusive techniques, to create the illusion of a single-scale space.

Blended, or double, perspective is a combined perspective that can produce apparently hidden or invisible regions of space for objects to hide within or be rendered invisible, and/or regions of multi-scale space similar to the Ames room illusion.

Optical Perspective Illusions

Perspective techniques are sometimes used to create visual illusions. Typically, a perspective illusion makes false impressions of size, depth, position, place (immersion), or transparency for objects/people. One example is when dimensionality is adjusted within a scene, making an object appear farther away, closer, larger, or smaller than it is.
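To make the size/distance trade-off behind such illusions concrete, here is a minimal Python sketch. It assumes a simple model in which an object is viewed centrally and face-on, so that the visual angle it subtends is 2·atan(height / (2·distance)). The function name and the sample figures are illustrative assumptions only.

```python
import math


def angular_size(height, distance):
    """Visual angle (in radians) subtended by an object of the given height,
    viewed face-on with the line of sight through its centre."""
    return 2.0 * math.atan(height / (2.0 * distance))


# A full-size figure far away, and a half-size figure at half the distance.
full_size_far = angular_size(height=1.8, distance=6.0)
half_size_near = angular_size(height=0.9, distance=3.0)

# The same full-size figure moved to the nearer position.
full_size_near = angular_size(height=1.8, distance=3.0)

print(math.degrees(full_size_far), math.degrees(half_size_near))
print(math.isclose(full_size_far, half_size_near))  # the two subtend the same angle
```

Because the half-size object at half the distance subtends the same visual angle as the full-size one, a single monocular view cannot tell them apart without further cues. Forced perspective and Ames-room style constructions exploit exactly this ambiguity.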

The six types of optical perspective illusions are:

- Visual Perspective Illusion: illusion by perceptually adjusted appearance (false direct view of physical reality);

- Graphical Perspective Illusion (includes perspective drawings/paintings): illusion by graphically constructed appearance (false apparent view of spatial scene);

- Instrument Perspective Illusion: illusion by secondary visual images (VR/AR, holograms, etc.), and/or projected appearance (false formed view of 3-D scene);

- Forced Perspective Illusion: illusion by physical construction of a false physical reality (apparent), or by the representation of a false physical reality (distorted/transposed scene geometry with apparent illusive effects);

- Transparent Perspective Illusion: illusion that forms transparent, see-through, or multi-layer views/images of a three-dimensional object/scene. There are two basic types of transparent perspective. The first is the projection of transparent views/images of three-dimensional objects/scenes onto a computer display. The second is similar to ‘mixed reality’ techniques such as spatial augmented reality. In this latter technique, 3-D transparent and multi-layer views of an object are projected into the physical environment stereoscopically through a Virtual Reality or Augmented Reality headset (for example).

- Mirror/Hybrid Illusions: Mirror perspective is a unique instrument perspective that can form amazing optical effects commonly employed in magic tricks, stage illusions, etc. For example, clever mirror arrangements can produce unusual perspective-related image fictions, ambiguities, distortions, and paradoxes.

We have now explained the six basic types of optical perspective illusions, but we have not given many concrete examples. In the next section we describe a new form of optical perspective illusion: the Andotrope display.

Andotrope Display

An Andotrope is a new type of modern zoetrope or omni-directional display invented by Mike Andos, whereby he mounts two iPhone displays back to back, each showing an identical video that is synchronised in time (playing the same video sections at identical times). Next, he mounts the phones vertically and also mounts an obscuring tube over both phones. The tube has two vertical slits (each operating as an optical limiter), with one slit mounted directly in front of each phone screen.

Next, we simply spin the device, and the whole cylinder rotates at high speed (up to 1200 RPM, or 20 rotations per second). Both displays synchronise their output, effectively doubling the frame-rate by displaying two images per rotation, which gives an effective 40 frames per second moving image. As the mechanism rotates, your view through the red slits sweeps across the displays, which ensures everyone in all directions ends up with a view of the full screen.
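The frame-rate figure quoted above can be checked with a short Python sketch. The function name and its `displays` parameter are our own illustrative assumptions. The only facts taken from the description are the 1200 RPM rotation speed and the two back-to-back displays, each seen once per rotation.

```python
def effective_frame_rate(rpm, displays=2):
    """Images per second seen by a stationary viewer of a spinning slit display.

    Each display passes the viewer once per rotation, so the viewer sees
    `displays` images per rotation.
    """
    rotations_per_second = rpm / 60.0
    return rotations_per_second * displays


fps = effective_frame_rate(1200)
print(fps)  # 1200 RPM = 20 rotations per second, times 2 displays = 40.0
```

With a single display the same rotation speed would give only 20 images per second, which is why mounting two synchronised displays back to back doubles the effective frame-rate.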

The result is a flat or 2-D moving image that appears in the same aspect, as if from an identical fixed viewing direction, yet is seen from omnidirectional viewpoints or multiple station points. That is, the 2-D image looks identical in perspective projection terms from anywhere around the full 360 degrees. See Figure 3 for a photograph of a working Andotrope display.

This device uses a principle similar to the persistence of vision effect, whereby you perceive a single stable but moving image. You can say that this is a type of 3-D display that presents an unchanged 2-D image perspective projection from all directions. Another way of describing such a 3-D/2-D perspective is that it produces 360-degree identical views of a flat or 2-D object or scene. An Andotrope provides an omnidirectional, bill-boarding holographic video to multiple simultaneous viewers in all directions.

Inside and Outside of the Image

A useful way of thinking about the practical use of perspective images/views is to recognise two basic ways that humans engage with images: whereby we are either ‘inside’ or ‘outside’ the image!

What we are referring to is the degree of immersion into the image-space, because we are either on the outside of an image looking in/at, or present inside the image/object space looking around and about. Patently, when it comes to natural/visual perspective we are inside physical reality, and hence inside object space. In a real sense we are also inside the image space itself, because we can look about in different directions to create our viewpoints.

Here we can perform two basic perspective-related visual operations. Firstly, we have sphere-of-revolution perspective, rotating about or orbiting the object of interest, which involves looking at a stationary object from different points of view. Secondly, we can observe the world around us using a sphere-of-vision perspective, where we look around ourselves in different directions but from a stationary point of view. The latter approach can involve looking within a ‘frontal’ hemisphere of vision, or it may involve turning completely about-face to see the back-facing hemisphere of vision. Of course, a limited version of both styles is simply looking ahead along a fixed visual axis, as with central or linear perspective.
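The difference between the two operations can be sketched as two ways of generating (station point, view direction) pairs. The Python example below is a simplified illustration only: it restricts both spheres to a single horizontal circle, and the function names, coordinate conventions and sample values are our own assumptions.

```python
import math


def orbit_viewpoints(centre, radius, steps):
    """Sphere-of-revolution (horizontal slice): the station point orbits a
    stationary object, and every view direction points back at the object."""
    cx, cy, cz = centre
    views = []
    for k in range(steps):
        angle = 2.0 * math.pi * k / steps
        eye = (cx + radius * math.cos(angle), cy, cz + radius * math.sin(angle))
        look = (cx - eye[0], cy - eye[1], cz - eye[2])
        views.append((eye, look))
    return views


def look_around_directions(eye, steps):
    """Sphere-of-vision (horizontal slice): the station point stays fixed
    while the view direction sweeps around it."""
    views = []
    for k in range(steps):
        angle = 2.0 * math.pi * k / steps
        views.append((eye, (math.cos(angle), 0.0, math.sin(angle))))
    return views


orbit = orbit_viewpoints(centre=(0.0, 0.0, 5.0), radius=2.0, steps=8)
around = look_around_directions(eye=(0.0, 1.6, 0.0), steps=8)

print(len({eye for eye, _ in orbit}))   # many station points, one target
print(len({eye for eye, _ in around}))  # one station point, many directions
```

In the first case the object is constant and the viewpoint varies. In the second the viewpoint is constant and the visual axis varies. Looking ahead along a fixed axis corresponds to taking a single entry from either list.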

Notice that both of these procedures, sphere-of-revolution and sphere-of-vision, are enabled/enhanced by spatial immersion in the perspective object/image space. Today such immersion is no longer limited to the physical or real world, but has been reproduced in Virtual/Augmented Reality simulation systems. VR is a type of perspective model of a region of the physical or artificial world.

A perspective model requires the formation of an integrated environment in which everything has its correct place or physical location. In a way, it is a type of map in which everything exists at the same spatial scale. Other kinds of images form partial illusions of a 3-D space, or images of an artificial spatial reality, and this can be done with single-viewpoint and non-explorable stereoscopic images, movies, etc. However, the degree of immersion is far less, because one cannot explore a fully coherent world from any viewpoint. One cannot (yet) navigate around in a cinema or television projected 3-D movie!

So we can classify a perspective view/image by the degree of immersion it provides (actual or potential immersion). This is another way of stating that immersion leads to modelling of a spatial reality, which relies on the ability of the user of an image/view, to generate (mental/overt) mapping capabilities or spatial reference frames within which each object can be prescribed, ordered, indexed, measured/scaled, etc.

1,200 Types of Perspective

How many categories/forms of perspective have we thus far identified?

All in all, we have defined around 120 different kinds of perspective categories/forms and associated phenomena. And the number grows rapidly when we look at other factors. For example, we have not yet included over 500 known types of perspective instruments, plus related viewing, matching, calculating, and representing methods, etc. It is easy to see how the number of perspective types can run into the several hundreds, and indeed we have spent 4 years diligently allowing the list to climb to 1,200 distinct types of perspective.

But if there are so many different types of perspective, where does a list like this get us? How does it help us solve the many problems humanity faces in the 21st century? Well, it is the categorisation, and the resultant taxonomy of types, that encapsulates knowledge of the important distinctions between types. It hence points to which specific kind(s) of perspective is/are best suited to (or is/are inherently involved in) a particular real-world scenario, or could be adjusted to meet the needs of a problem/solution nexus.

Conclusions

The foundation of visual/optical/technical perspective is mathematics and in particular geometry; whereby this form of perspective deals with visual images/views that are comprised of standard mathematical structures such as points, lines, and regular solids.

Earlier we explained that humans interpret images in terms of measurement of structure or ordered Forms, because this is the only way we can deal with all the complexity/uncertainty of reality. This is how we cope with (in actuality) unknown shapes/sizes/locations/orientations of spatial objects in spatial reality. This issue relates to the correspondence/equivalence, scale/shape/size, and the shape/form sufficiency problem(s) mentioned earlier, whereby humans have no direct visual way of unambiguously interpreting an image of spatial reality (ref. its shape/size/location etc).

As an alternative, we can use our memory of a recognised object’s shape and size, and how it changes with viewpoint, to interpret its distance, aspect, or true shape. However, in general, interpretation of a perspective image/view relies on making certain assumptions about the level of structural order present in the object space, because that order is a primary cause of the image itself (in combination with the projection method/imager employed).

In short, we represent disorder by using order. How so? Well, when looking at a spatial image our mind tries to map known shapes (straight lines, regular curves, flat planes, and regular solids) onto what it sees, by recognising them from known examples. Examples include the recognition of converging parallels, or of warped images of squares, rectangles, and circles, etc. In other words, interpretation of a perspective image involves looking for, or seeking out, perspective degradation of regular forms, including perspective frameworks and/or metric grids. Even highly irregular shapes are perceived in this way: we test whether the irregular shape is comprised of smaller regular shapes, or is in some way resting upon, or aligned next to, a regular or flat plane, for example, from which we can gain an impression of location and/or size/physical shape, etc.

This is precisely where knowledge of perspective categories/forms (from a developed taxonomy of perspective) can help us (and our AI computer vision systems) recognise and know how object shapes are projected and seen using different kinds of perspective systems. A taxonomy of perspective can help us to develop accurate and precise shape grammars that will take human vision to the next level in terms of relevance and usefulness as applied to the complex visual problem spaces of the future.

In this respect, we can make a distinction between views made firsthand and images that are secondhand, such as drawings, photographs, movies, etc. But as explained, perspective principles such as central perspective apply to both kinds of image, because the same geometric principles are in operation. Thus we interpret images in terms of our experience of what the optics/geometry of a known object can, may, or will be.

Patently, an image or view is a substitute for reality. A faithful picture operates in some sense as a surrogate for reality, that is, for a spatial object/scene. The surrogate must be relatively specific to the absent object, and there must be a close (approximately one-to-one) relationship between the elements in the picture and those same elements in the object space.

-- < ACKNOWLEDGMENTS > --

AUTHORS (PAGE / SECTION)

Alan Stuart Radley, April 2020 - 16th May 2025.

---

BIBLIOGRAPHY

Radley, A.S. (2023-2025) 'Perspective Category Theory'. Published on the Perspective Research Centre (PRC) website 2020 - 2025.

Radley, A.S. (2025) Perspective Monograph: 'The Art and Science of Optical Perspective', series of book(s) in preparation.

Radley, A.S. (2020-2025) the 'Dictionary of Perspective', book in preparation. The dictionary began as a card index system of perspective related definitions in the 1980s; before being transferred to a dBASE-3 database system on an IBM PC (1990s). Later the dictionary was made available on the web on the SUMS system (2002-2020). The current edition of the dictionary is a complete re-write of earlier editions, and is not sourced from the earlier (and now lost) editions.

---

Copyright © 2020-25 Alan Stuart Radley.

All rights are reserved.
